The iMouse System – A Visual Method for Standardized Digital Data Acquisition Reduces Severity Levels in Animal-Based Studies

Maciej Laz †, 1, 6, Mirko Lampe†, 2, 6, Isaac Connor3, 6, Dmytro Shestachuk4, 6, Marcel Ludwig5, 6, Ursula Müller6, Oliver F. Strauch6, Nadine Suendermann7, Stefan Lüth8, 9, Janine Kah*, 2, 6, 8, 9

1Thaumatec Tech Group, Breslau, Poland

2IIoT-Projects GmbH, Unit Research and Development, Potsdam, Germany

3Zoneminder Inc., Toronto, Canada

4Thaumatec Tech Group, Breslau, Poland

5MesoTech, Brandenburg, Germany

6Leibniz-Institute of Virology, Small animal facility, Hamburg, Germany

7Zoonlab, Castrop-Rauxel, Germany

8Department of Gastroenterology, Center for Translational Medicine, University Hospital, Brandenburg

9Faculty of Health Sciences Brandenburg, Brandenburg Medical School Theodor Fontane

†Both authors contributed equally to this work

*Corresponding author: Janine Kah. Department of Gastroenterology, Center for Translational Medicine, University Hospital Brandenburg, Brandenburg Medical School, Theodor Fontane; 14770 Brandenburg an der Havel, Germany

Received: 24 October 2023; Accepted: 31 October 2023; Published: 29 December 2023

Article Information

Citation: Maciej Laz, Mirko Lampe, Isaac Connor, Dmytro Shestachuk, Marcel Ludwig, Ursula Müller, Oliver F. Strauch, Nadine Suendermann, Stefan Lüth, Janine Kah. The iMouse System – A Visual Method for Standardized Digital Data Acquisition Reduces Severity Levels in Animal-Based Studies. Journal of Pharmacy and Pharmacology Research. 7 (2023): 256-273.

Abstract

In translational research, using experimental animals remains the preferred standard for assessing the effectiveness of potential therapeutic interventions, particularly when investigating physiological interactions and relationships. However, the execution of these investigations is contingent upon minimizing the impact on the well-being of the experimental animals. To evaluate the severity level of the animals, routine visual inspections are conducted multiple times each day. These visual assessments disrupt the animals during their periods of rest, resulting in elevated stress levels, which, in turn, exacerbate the animals' burden and may consequently influence the outcomes of scientific studies. Our study examined the feasibility of implementing a digital monitoring system in a translational study conducted within individually ventilated cages (IVCs). Our objective was to determine whether a camera-based observation system could reduce manual visual inspections and whether digitally available data from this study could be used to train an algorithm capable of distinguishing between activities such as drinking. Furthermore, we aimed to ascertain whether the system could monitor the recovery phase following experimentally induced high stress, potentially as a substitute for frequent visual inspections. Within the scope of our study, we successfully demonstrated the feasibility of integrating iMouse system hardware components into the existing IVC racks. Importantly, we established that this system can be accessed remotely from outside the animal facility, thus facilitating comprehensive digital surveillance of the experimental subjects. Furthermore, the digital biomarkers (digitally acquired data from the home cage) proved instrumental in training algorithms capable of analyzing the long-term drinking behavior of the animals.
In summary, our work has yielded an integrated, retrofittable, and modular system that meets two critical criteria. Firstly, it enables visual inspections without disturbing the animals. Secondly, it enhances the traceability and transparency of research involving animal subjects by generating digital biomarkers through digital data capture.

Keywords

Animal-based study, Home cage monitoring, AI-driven digital biomarkers, digital biomarkers, digitalization, Machine Learning, open-source technology, Digital home cage monitoring, DHC, DHCM

Article Details

1. Introduction

Experimental animals have played an indispensable role in elucidating the physiological foundations of various conditions and evaluating the efficacy of therapeutic interventions. Their significance extends to the approval of pharmacological products and chemical substances for clinical applications [1, 2]. While alternative approaches like organ-on-a-chip, organoids, and bioinformatic models have emerged and provided valuable insights into pharmacological interactions and the intricacies of individual organs, they fall short of replicating the complexity of living systems [3]. Consequently, animal models remain essential for a comprehensive understanding of underlying mechanisms, particularly in fields such as disease research, physiology, drug testing, and toxicity analysis [4]. These models facilitate the translation of findings from pre-clinical studies to clinical applications, thereby offering invaluable insights into disease mechanisms and potential therapeutic strategies. However, it is imperative to prioritize the welfare of these animals [5]. Societal recognition of animal welfare principles and the necessity to implement the refinement, replacement, and reduction strategies (3Rs) when designing animal experiments are well-established [3]. Mitigating the burden on experimental animals during research procedures is paramount. Refinement strategies encompassing environmental enrichment (e.g., running wheels, shelters, toys) and adjustments to handling and housing conditions (e.g., temperature, noise) aim to alleviate stress levels during experiments [6]. Routine visual inspections, a customary practice in animal research, present a unique challenge in minimizing animal burden. While they serve to monitor the animals' well-being, these inspections disrupt the animals during their resting phases, potentially inducing heightened stress levels. 
Stress represents a multifaceted phenomenon encompassing physiological, behavioral, and neurobiological responses, which can detrimentally impact research outcomes [7-10].

Researchers must be aware of these stress-induced perturbations and take measures to minimize their impact on the experimental design and data analysis to ensure the reliability and validity of their findings. Given this challenge, exploring alternative methods for assessing animal well-being that minimize interference with their natural behaviors and stress levels is imperative. Technological advancements have facilitated the development of non-invasive, automated monitoring systems capable of continuous data collection without human intervention. Such approaches can significantly mitigate stress-related disruptions and enhance the precision and reliability of research results. However, recently available commercial Home-Cage Monitoring (HCM) systems are either sensor-driven or prohibitively expensive for pre-clinical research projects [11-15]. To address this gap, we have developed a non-invasive, automated monitoring system that can be seamlessly integrated into existing experimental infrastructure, reducing human influence and thereby improving data acquisition in pre-clinical studies. In this study, we assessed the functionality of the developed digital platform for monitoring, recording, and analyzing laboratory animals within their native home-cage environments. The system was implemented within an ongoing pre-clinical research project to assess its practicality and advantages in pre-clinical experiments. We conducted a comprehensive proof-of-concept, encompassing integration into the institute's infrastructure, research, and development through the testing of diverse hardware and software components. Furthermore, we evaluated the system's utility in an everyday use case: postoperative monitoring. Data acquired through this system underwent rigorous analysis via integrated software systems, a structured review process, and Machine Learning (ML) development and implementation.

2. Materials & Methods

2.1 Generation, Breeding, and Housing of mice

In this study, we employed parental female and male mice with the transgenic NOD.Cg-Prkdcscid Il2rgtm1Wjl/SzJ background (Strain #005557) from The Jackson Laboratory. Parental females and males were used to establish a breeding colony within the LIV animal husbandry. Individual mice (n = 13) were observed within the study from a starting age of 10 weeks. To monitor behavior before and after surgery, we used mice that underwent orthotopic transplantation of human liver tumor cells. The individual mice were marked and identifiable by ear punches or tail marks. Mice were housed in individually ventilated cages (IVCs) to exclude infection or transmission of infectious diseases. IVCs were changed as described in the standard operating procedure of the animal husbandry.

2.1 Surgery and Recovery phase

To achieve the goal of this study, we employed animals from an ongoing pre-clinical study (authority number N56/2020). The goal was to monitor the mice before and after the transplantation of primary human tumor cells. Shortly, tumor cells were transplanted after isolation from fresh resections. The mice are anaesthetized with isoflurane in all subsequent preparatory and surgical procedures. In the first step, the animal is injected subcutaneously with the painkiller metamizole (200 mg/kg). In the next step, a hair trimmer removes the abdominal hair from the animals. The shaved area is then cleaned and disinfected with Betadine. This first cleaning takes place away from the operating table. After the onset of action of the painkiller metamizole (20-30min), the animal is now fixed on the operating table, and the abdomen is cleaned and disinfected two more times with Betadine. The operating table is tempered at 37°C to prevent the animals from cooling. A laparotomy is performed along the midline over a length of 3 cm. After imaging the left lateral lobe of the liver, intrahepatic injection of the tumor cells follows. Applying the cell solution preheated to body temperature (0,5x10E6 HCC tumor cells dissolved in sterile matrigel) is carried out in a standardized volume of 20µl utilizing a very thin injection cannula (30-gauge, 0.3 mm). Possible bleeding is quenched by absorbable hemostatic. After the closure of the muscles using a non-resorbable filament and skin using two clips, the mouse is separated from the isoflurane anaesthesia and transferred to the conventional cage (Home-cage). Postoperatively, mice receive carprofen (5mg/kg subcutaneously) every 24 hours for 72 hours. The painkiller application was stopped after 72 hours. Subsequently, the mice are examined daily to detect pathological changes. 
Pathological criteria include anaemia (inspection of tail colour), signs of local infection, splenomegaly (palpation), weight loss (weighing two times a week), as well as apathy and motor deficits (for example, sluggishness or pulling a limb).

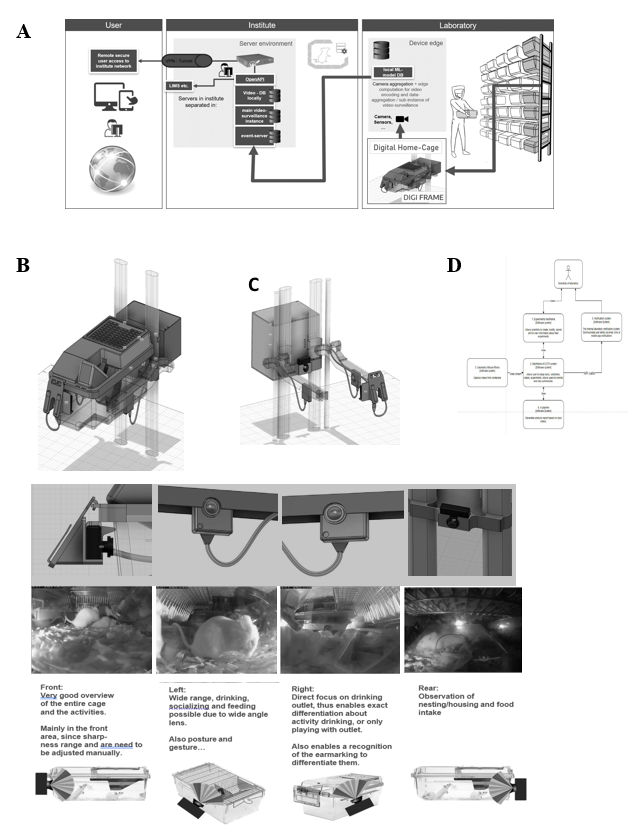

2.2 System architecture, hardware, and software

The iMouse solution is retrofitted around existing home-cages. The hardware is therefore named "DigiFrame" because it encloses the home-cage (Figure 1). Our system consists of a standardized control unit per cage. This control unit is connected to 230 V power and, via LAN, to the internet (Figure 1). Within the control unit, we install one compute unit per camera. The cameras (1-4 per home-cage: front, left, right, back) are integrated into internally developed housings, feature an integrated IR filter, and are equipped with a wide-angle lens with a view of 120mm (Figure 1E). Each camera is connected to its compute unit by a home-cage-specific, pre-configured cable. The camera system is supported by a home-cage-specific IR night light (920 nm) to allow 24/7 remote observation [16]. To ensure the field of view covers most of the home cage, we place cameras on the four sides of the cage. The focus was on easy handling, simple installation, and serviceability. Cameras were mounted on the existing housing rack at the guide rails, which enables a consistent view into the IVC (Figure 1A). Each camera is connected to a dedicated compute unit, which connects to the computer network via a LAN cable. We explicitly decided to use the scalable open-source software ZoneMinder as the foundation to observe the mice during their recovery phase and during the experiment (Figure 2). ZoneMinder is a widely used and scalable monitoring platform that can capture video from various camera devices and perform complex motion detection on the captured video in real time. We developed essential functions for the project and named the extensively modified platform the iMouseHub platform. The cameras were used for real-time monitoring, recording, and motion detection once zones had been set up via the iMouseHub platform (Figure 2). To record data, we implemented scalable computer hardware. 
This hardware was securely integrated into the existing IT infrastructure of the customer, LIV. Users can access the iMouse solution by computer (full functionality) or through the mobile app on a tablet or mobile phone (view mode), as described in Figure 1.

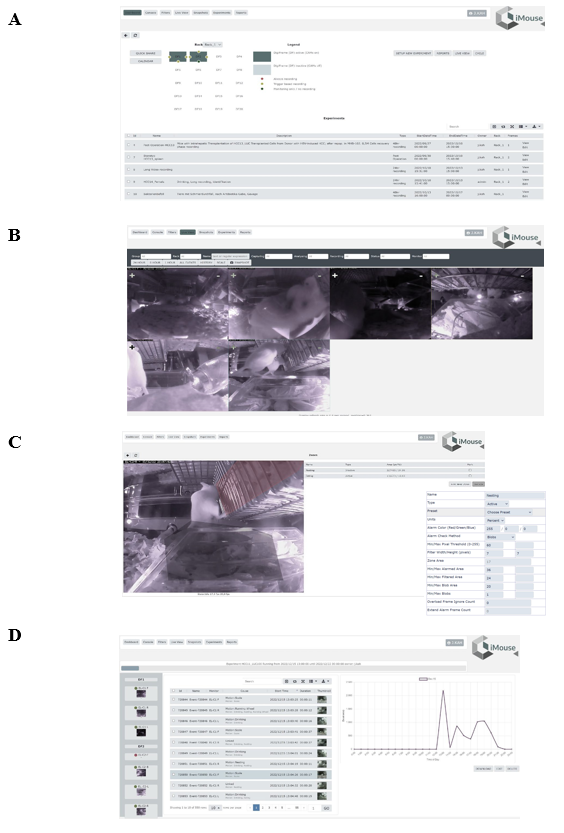

2.4 Setting up zone-based recording

For the zone-based recording, we first created zones by naming them and adapting the zone shape to the spot of interest, e.g., the drinking outlet or the food area. Depending on the size of the zone and the expected activity within it, we adapted the detection sensitivity (the percentage change in visible pixels or blobs) and the alarm-check method; both settings were used to trigger the detection and recording of motion (Figure 2C). For example, to detect drinking activity, we used the zone type "active" with the alarm-check method "alarm pixels" and a threshold of 20 (fast, high sensitivity). To detect climbing activity, we used the zone type "active" with the alarm-check method "blobs" and a threshold of 40 (best, medium sensitivity). To standardize the recordings across different zones, we implemented six graded motion-detection presets, three based on blobs and three based on pixel variation.
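
As a minimal illustration of a pixel-based alarm check of this kind, the following Python sketch counts the share of pixels inside a rectangular zone that changed between a reference frame and the current frame. It is not the ZoneMinder implementation: the function names, the rectangular zone shape, the reading of the threshold as a per-pixel intensity difference, and the `min_area_percent` parameter are assumptions made for illustration only.

```python
from typing import List, Tuple

Frame = List[List[int]]           # grayscale image: rows of 0-255 intensities
Zone = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def alarmed_pixel_percent(reference: Frame, current: Frame, zone: Zone,
                          pixel_threshold: int = 20) -> float:
    """Percentage of zone pixels whose intensity changed by more than pixel_threshold."""
    top, left, bottom, right = zone
    total = (bottom - top) * (right - left)
    if total <= 0:
        raise ValueError("zone must cover at least one pixel")
    changed = sum(
        1
        for y in range(top, bottom)
        for x in range(left, right)
        if abs(current[y][x] - reference[y][x]) > pixel_threshold
    )
    return 100.0 * changed / total


def zone_is_alarmed(reference: Frame, current: Frame, zone: Zone,
                    pixel_threshold: int = 20,
                    min_area_percent: float = 10.0) -> bool:
    """True if the changed share of the zone reaches min_area_percent (assumed value)."""
    percent = alarmed_pixel_percent(reference, current, zone, pixel_threshold)
    return percent >= min_area_percent
```

A blob-based check would additionally group neighbouring changed pixels into connected regions before applying the area criterion; that step is omitted here to keep the sketch short.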

2.5 Video recording and storage

Recordings were made to analyze the zone-based activity level of the animals, for AI training, and for comparative analysis within the postoperative phase (7 days) of animals undergoing a surgical procedure. Short video files were recorded at a resolution of 640x480 pixels and 20 fps, with a maximum length of 10 s. For storage, the LIV internal server network was used, together with the LIV cloud storage for external access. Process-wise, all recorded video files were stored with standardized information (time stamp): date, camera name, and cage number to ensure traceability. For security purposes, all files were accessible only to authorized persons and were encrypted in the storage space for additional protection.
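
The standardized information attached to each clip can be pictured as a small record from which a traceable file name is derived. The sketch below is illustrative only: the class name, the file-name pattern, the `.mp4` extension, and the example cage number are assumptions and do not describe the naming scheme actually used by the platform. The frame count follows directly from the stated 20 fps and 10 s maximum clip length.

```python
from dataclasses import dataclass
from datetime import datetime

FPS = 20               # frames per second, as stated in the text
MAX_CLIP_SECONDS = 10  # maximum clip length, as stated in the text


@dataclass(frozen=True)
class ClipMetadata:
    """Hypothetical record of the traceability fields named in the text."""
    recorded_at: datetime
    camera_name: str
    cage_number: str

    def filename(self) -> str:
        # Assumed pattern: <date>_<time>_<camera>_cage<number>.mp4
        stamp = self.recorded_at.strftime("%Y%m%d_%H%M%S")
        camera = self.camera_name.replace(" ", "_")
        return f"{stamp}_{camera}_cage{self.cage_number}.mp4"


def max_frames_per_clip(fps: int = FPS, seconds: int = MAX_CLIP_SECONDS) -> int:
    """Upper bound on the number of frames in one clip."""
    return fps * seconds


clip = ClipMetadata(datetime(2022, 12, 19, 8, 13, 0), "EL-C1 R", "1")
print(clip.filename(), max_frames_per_clip())
```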

2.6 Video Processing by Learning Algorithms

The OpenCV framework within the Python programming language was employed to process the videos. The AI architecture relied on PyTorch, a Python framework for deep learning and processing unstructured data, and consisted of three main models: YOLOv7 for object detection, ResNet50 for behavior classification, and DeepSORT for object tracking. The first two models require labelled data as a precondition for training and deployment. This step is vital because it allows the models to learn from the labelled data in a supervised learning process, as shown in Figure S3. The detection algorithm, based on the official repository [17], is run frame by frame on the videos with the goal of detecting the desired objects. The tracking algorithm follows the objects and differentiates between them based on their previous and present positions and estimates of their movement. The classification algorithm is used for behavior classification based on the cropped images of the mice in each video frame. The goal is to detect the mice in a video and classify the behavior of each of them. The output of this process takes both textual and visual forms. The function returns a listing of information, such as which mouse and which activity, typically in JSON format (e.g., "labels": ["mouse1", "mouse3"], "behaviors": ["drinking", "sleeping"], "coordinates": ["0,3 100,200", "400,100", "500,600"]). The visual result is displayed as an image with the bounding boxes and labels drawn over the original image. The textual data is inserted into the database alongside the event record so that it can be searched for and referenced later. The visual image is likewise stored alongside the event video for later display. Using 260 video events (130 "real" drinking events and 130 "false" events), we reached an accuracy of 89% in the drinking training phase, as shown in Figure S4. After that, we applied the ML algorithms to the longitudinal post-operation dataset, as described in Figure 2.
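
To make the textual output more concrete, the following sketch serializes per-mouse detections into a JSON record with the three keys named above. The `Detection` class, the `event_id` field, and the four-integer bounding-box layout are assumptions for illustration; the exact schema used by the pipeline is not specified here.

```python
import json
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    """One detected mouse in a frame (hypothetical structure)."""
    label: str                       # e.g. "mouse1"
    behavior: str                    # e.g. "drinking"
    box: Tuple[int, int, int, int]   # assumed layout: x_min, y_min, x_max, y_max


def to_event_json(event_id: int, detections: List[Detection]) -> str:
    """Serialize detections into a JSON string stored alongside the event record."""
    payload = {
        "event_id": event_id,  # assumed link to the recorded event
        "labels": [d.label for d in detections],
        "behaviors": [d.behavior for d in detections],
        "coordinates": [list(d.box) for d in detections],
    }
    return json.dumps(payload)


record = to_event_json(
    731553,
    [
        Detection("mouse1", "drinking", (0, 3, 100, 200)),
        Detection("mouse3", "sleeping", (400, 100, 500, 600)),
    ],
)
print(record)
```

Keeping the three lists index-aligned means that entry i of each list refers to the same animal, which keeps later database queries simple.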

3. Results

3.1 The digital home cages (DigiFrames) provide remote digital observation.

Within the project, one of the main challenges was integrating the iMouse solution into the existing structure of the animal husbandry. The concerns of the facility's users and operators about integrating an open-source system had to be overcome first. For this purpose, we developed a security concept that ensures the integrity of the research and the institute and protects sensitive and valuable data. This concept uses a VPN connection, as shown in Figure 1A. We started the implementation of the digital home cages with two DigiFrames on the right side of an ordinary rack system, which was used in an ongoing study at the LIV in Hamburg (Figure 1A-C). Figure 1 (B and C) shows that the observation units and the control unit were integrated around the home cages (DigiFrame). This kind of implementation did not change the handling processes of the facility employees or the users for the daily inspection or the changing of the cages. The control units are connected to the institute's internal IT system via a LAN connection over a dedicated switch unit. A virtual machine (VM) on the institute server hosts them; the platform (iMouseHub) is installed and accessible on the same VM. Videos recorded by the system are stored on the internal physical server, so the user can keep and review the files, including identifying metadata. The iMouse platform is accessible via the institute network or, if the user is not in the institute, via VPN, as illustrated in Figure 1A. We aimed to analyze the feasibility of a monitoring system for IVC home-cages in animal husbandry. Such a system can provide complete transparency of ongoing experiments by displaying the day and night activity of the animals in their natural habitat without disturbing them. Therefore, we established the DigiFrame as a retrofit adaptation of the IVC home-cage, regardless of the manufacturer. The modular DigiFrame design is standardized and can be used for nearly all types of home-cages. 
Nonetheless, in this study, we show the DigiFrame concept representatively in one rack system, the Emerald line from Tecniplast. As Figure 1E shows, we included four observation units (cameras) around the IVC home-cage, with the resulting perspectives. As a result, the DigiFrame concept gives a complete view into the home-cage at any time, since we also included a night vision system. In line with others, we used a specialized 920 nm light-emitting diode (LED), chosen for its imperceptibility to rodents [16].

3.2 The iMouse platform facilitates structured digitally available observation data sets

In Figure 1D, we show the simplified system architecture. The user creates the experimental input, while the recording is managed automatically by the system. The user can access and view the recordings and perform live observation for overall activity analysis or severity assessment. Moreover, the recorded files were used for the machine learning process, and the algorithm produces reports for AI-based analysis, as described previously. After logging in, the user can use live view, recordings, and zone-based motion detection, depending on the study and the required dataset (Figure 1D). To operate the system, we adapted an existing, scalable, industry-proven open-source software platform, adding scientifically necessary functions, to create the iMouseHub platform with an experimental focus. We implemented the ability to manage simultaneous access by multiple users. After logging in, as shown in Figure 2A, the user is taken directly to the user-specific dashboard. Here, all experiments are listed, and the user can access the main functions of the system (Figure 2B-D). The live view provides direct access to the observed, user-assigned home-cages, as shown in Figure 2B. Here, all perspectives are displayed in parallel. One of the main functions of the software is the experiment design. Here, the users can define the observation period of interest by setting up the timeline and the included observation units (cameras). Since recording over an extended period with several observation units leads to a large data set, we implemented a more specific recording option by creating zones of interest for motion detection (Figure 2C). In Figure 2C, two created zones are displayed: the Eating zone in red and the Nesting zone in grey. The system provides different options for setting up the zones, as shown on the right side of Figure 2C. 
Setting up the zones in the experimental setting is crucial for precise recording and for preventing data waste. During a pre-study period of zone evaluation, we tested multiple conditions to determine which options were sensitive and accurate enough to detect all ongoing events while excluding events caused by light changes and reflections. On this basis, we implemented a pre-setup, listed in Table 1. We used the same settings for the different zones during the study. Figure 2D shows the structure of the experiment section. The upper part gives general information about the experiment, including name, duration, and user. In the experimental section, associated observation units and their observation status are displayed as thumbnails. The recorded events are listed in the center of the experimental page, regardless of the recording method (zone-based or continuous). The events are plotted over time by hour per day and by duration in seconds. In Figure S1, a representative event is shown. The system provides all event metadata, leading to distinct traceability and transparency of all events.
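
The hour-per-day plot of event durations can be thought of as a simple aggregation over the recorded events. The following sketch is a hypothetical reconstruction of that summary, not the platform's own code: the function name and the input format (start time plus duration in seconds) are assumptions, and each event is attributed entirely to the hour in which it starts.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, Tuple


def activity_per_hour(
    events: Iterable[Tuple[datetime, float]]
) -> Dict[Tuple[str, int], float]:
    """Sum event durations (seconds) per (ISO date, hour of day)."""
    totals: Dict[Tuple[str, int], float] = defaultdict(float)
    for start, duration_s in events:
        # Simplification: an event crossing an hour boundary counts for its start hour.
        totals[(start.date().isoformat(), start.hour)] += duration_s
    return dict(totals)


example_events = [
    (datetime(2022, 12, 19, 8, 13, 0), 10.0),
    (datetime(2022, 12, 19, 8, 40, 0), 5.0),
    (datetime(2022, 12, 19, 23, 5, 0), 7.5),
]
for key, seconds in sorted(activity_per_hour(example_events).items()):
    print(key, seconds)
```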

Figure 1: iMouse System Integration in the Existing Animal Husbandry Environment. (A) Illustration of the Security and Data Management Concept. The three main pillars are the laboratory, the institute network, and the user. The registered user can access the system remotely or within the institute network. The data collection and institute network are secured for remote access by implementing an OpenVPN. (B-C) Technical drawing of the Home-cage placed in the rack and the implementation of the observation units around the cage. Drawing showing the perspective with (B) or without (C) the Home-cage placed in the rack. Observation units are integrated at four positions: front, right, left, and rear (B, C). The observation units are connected to the Compute Unit behind the rack. The Compute Unit is connected to the institute's electricity and information network. (D) Simplified flow diagram of the iMouse system's workflow, including the system's vision. The scientist or user sets up the experimental frame (1), while the system provides the data (2, 3) for the scientist and the AI pipeline (4). A connection to the laboratory management system is possible here (5). (E, upper part) Detailed technical drawing of the integrated cameras around the Home-cage. (E, middle part) Live view inside the Home-cage and the four perspectives of the cameras to monitor the different activity zones of the mice inside the Home-cage. (E, lower part) Representation of the four cameras and their focus on the Home-cage.

Figure 2: iMouseHub platform screenshots showing the main functions necessary to set up, view, and analyze experiments. (A) Dashboard of a registered user. The observation units (physical cameras) available to the user are displayed in the upper part. The user can choose the husbandry rack and the DigiFrame used for the ongoing experiment. The observation units are shown as dots around the cage, indicating their number, position, and recording/monitoring status (see legend). The lower part of the dashboard shows the user-specific experiments, which can be viewed and edited by the user. (B) Live view page of the system. The user can choose a particular DigiFrame for observation. (C) Motion detection option of the system. The user can create zones of interest for every observation unit, specifically for every experiment. The user can name the zones and fit them to the area of interest. (D) Experiment page of the system. The system acquires events and collects them into an experiment folder. On the right side, the first day of an experiment is displayed, showing the zone-specific events over time on the x-axis plotted against the summed duration of the events on the y-axis. All observation units that are part of the experiment are listed on the left side, grouped by DigiFrame.

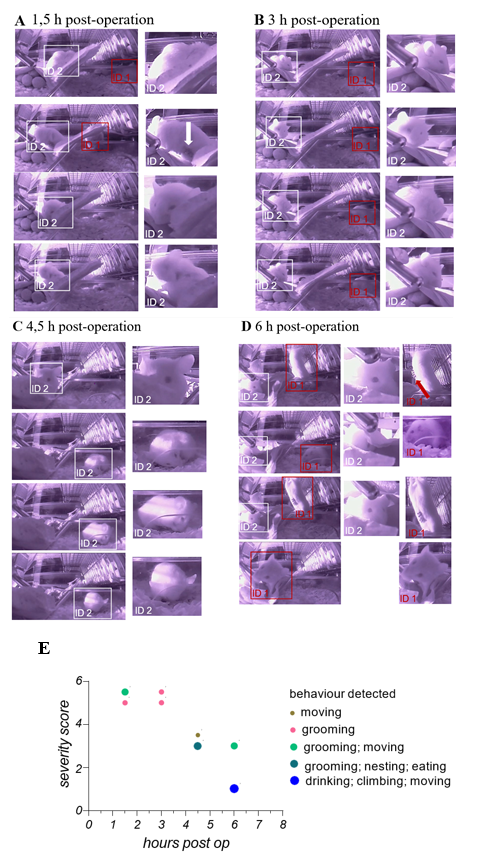

3.3 Live viewing allows intensively observing animals with high severity levels without interfering with the recovery phase of the animals

During the underlying translational study, animals were employed as a therapeutic testing model for orthotopic transplantation of liver cancer cells. These animals were manually scored directly after the operation and then every 6 h within the following 72 h. This procedure is necessary to ensure the recovery of the animals. Here, we also employed the camera-based iMouse solution to carry out intensive observation within the 6 hours after the operation and before the first manual scoring procedure. At the start of each of the four remote observation sessions (1.5, 3, 4.5, and 6 hours post-operation), the user started a recording of 5 minutes. Figures 3A-D show representative pictures of one group of animals during their digital postoperative observation. Mice were observed regarding their habitus, emaciation, unusual posture, limb position, and fluid losses (dehydration). At 1.5 hours post-operation, the animals showed visibly slowed and laboured breathing. We could observe bristling of the hair as well as altered exploration behavior. Mouse ID1 showed explorative behavior but slow movement and a cautious gait. Mouse ID2 showed grooming activity, a curved back posture, and narrowed eyes (Figure 3A). The wound-closure metal clips were visible and in good condition. As shown in Figure 3B, both mice showed grooming activity 3 hours post-operation and exhibited features of high severity levels. Mouse ID2 showed slow and limited movement. At 4.5 hours post-operation, mouse ID2 showed higher movement frequencies, movement into the housing zone, regular grooming, and eating events. At 6 hours post-operation, mouse ID2 showed open eyes but less movement, while mouse ID1 exhibited drinking and eating as well as exploring and reaching-out behavior. The metal clips were visible and in good condition. During observation, gravity conditions were normal. 
During the digital observation, we could assess the main criteria of the postoperative severity levels and the persistence of the wound-closure metal clips during this time, including grooming of the animals. We summarize the information from the remote observation in the graph shown in Figure 3E. Here, we used a severity score from 5 = high to 1 = no severity, which we derived from the captures shown in Figure 3A-D and the underlying recorded data sets of this observation. Since we could monitor the mice undisturbed, we could include further information such as eating, drinking, nesting, and grooming in the graph to give a general health status. We verified these findings with another three groups (1x n = 2; 2x n = 3) of mice used for orthotopic transplantation and made equivalent observations. Six hours after the operation, the animals already showed drinking, eating, nesting, and climbing behavior.

Figure 3: (A-D) Wide-angle right-side captures of the observed area in the home-cage. Two mice are shown, which were monitored and recorded for 5 min at each timepoint: 1.5, 3, 4.5, and 6 hours post-operation. At the last timepoint, 6 hours post-operation, the manual observation and scoring of the two animals took place to ensure recovery of the animals after the operation. Captures were collected from video material that was recorded manually during live observation at the indicated timepoints. To identify the individual animals, they were marked retrospectively with boxes when visually detectable (ID1 = red box; ID2 = white box). On the left side of Figures 3A-D, captures are displayed in their original size; on the right side, close-ups present the described observations of the animals' behavior. (E) Graphical summary of the observation slots (5 min at each timepoint), including behavior types and general condition status leading to the severity level score, along the timeline for both monitored mice, labelled ID1 and ID2.

3.4 Automated recordings via specific zones enable multiple activity analyses over time.

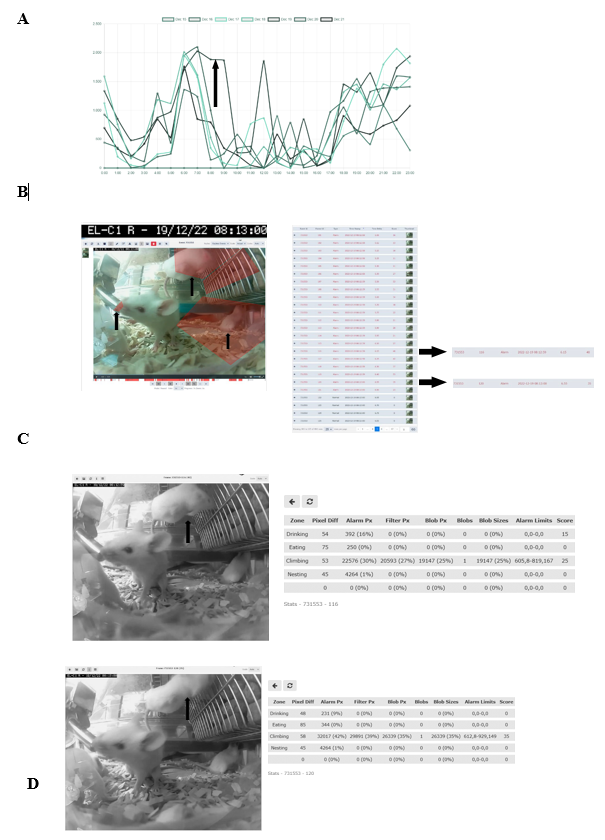

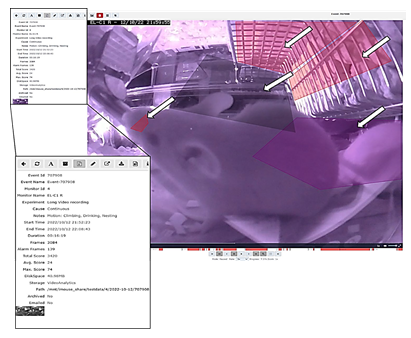

To evaluate the relevance of a home-cage monitoring system like the iMouse solution during pre-clinical basic research studies, we employed another small group of 3 NSG mice used for orthotopic transplantation of liver cancer cells. The 3 female mice were housed in the same cage for three days after maintenance and before the operation. After the operation, the mice were returned to their familiar home-cage for the wake-up phase. After the wake-up phase (20 min), the mice were placed back into the same rack but into the DigiFrame slot for observation. Next, we started the pre-configured experiment and ran the observation for seven days under the same litter and enrichment conditions (no houses, no toys, no tunnel, but cellulose). The reason for the low enrichment is the use of metal clips for wound closure after the operation. The iMouse system was set for motion-based recording of the climbing, eating, drinking, and nesting zones. As shown in Figure 4A, the system recorded higher activity levels at night and lower levels during the day. The system sums the activity duration and displays it per hour and day. Therefore, Figure 4A plots a higher activity level as a longer duration. To review the recording and explain the recording modalities provided by the system, we selected a single event, 731553, which was detected on 19 December 2022 at 08:13:00 by the observation unit EL-C1 R (indicated by the black arrow in Figure 4A). The event includes many alarm frames, which are visible in the lower timeline (red columns) and refer to multiple activity recordings. As shown in the single frame in Figure 4B, all three mice are active in different zones. Therefore, the triggers for motion detection are climbing, drinking, and nesting. To describe the complexity of the motion-based recording, we analyzed two single frames (ID 116 and ID 120), shown in Figures 4C and D. Both frames exceed the threshold for setting an alert and, therefore, for recording (10%). 
Frame 116 scores 40 as an additive effect of the pixel changes in the drinking zone (16%) and the blob changes in the climbing zone (30%). Frame 120 scores 35 due to the blob change in the climbing zone (42%). This is in line with the visible movement of the mouse in the climbing zone in frame 120 (marked by black arrows), compared to its position in frame 116, which results in the recorded blob changes of the analyzed frame. In comparison, the mouse in the drinking zone causes a lower change in pixels (9%), which is below the detection limit (10%). In conclusion, distinguishing single activities of single mice is not viable when multiple zones are set up for activity recording. Nevertheless, drinking motion was part of both alert frames. As a result, the method described so far was able to provide activity measurements, as plotted in Figure 4A. This analysis was acquired without disturbing the mice during their recovery and post-operation phase. In the underlying study, animals were usually monitored every 6 hours within the first 72 hours after the operation. To reduce the stress induced after the operation, it is critical to understand how much manual observation is needed. Our recorded dataset shows a high activity level shortly after the operation, indicating that the animals recover from the postoperative burden earlier than expected. Here, the digital observation gives a more precise understanding of the manual observation needed.
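
The per-zone reasoning for the two frames can be restated as a small check of which zones reach the 10% detection limit. The sketch below uses only the percentages reported for the drinking and climbing zones; the function name and the use of "greater than or equal" at the limit are assumptions, and the way the system combines zone changes into the overall frame score (40 and 35) is deliberately not reproduced because it is not specified here.

```python
from typing import Dict, List

ALARM_THRESHOLD_PERCENT = 10.0  # detection limit stated in the text


def alarmed_zones(zone_changes: Dict[str, float],
                  threshold: float = ALARM_THRESHOLD_PERCENT) -> List[str]:
    """Return the zones whose change (in percent) reaches the threshold, sorted by name."""
    return sorted(zone for zone, pct in zone_changes.items() if pct >= threshold)


# Reported changes for the two analyzed frames (other zones omitted).
frame_116 = {"drinking": 16.0, "climbing": 30.0}
frame_120 = {"drinking": 9.0, "climbing": 42.0}

print(alarmed_zones(frame_116))  # both zones reach the limit
print(alarmed_zones(frame_120))  # only the climbing zone reaches the limit
```

This makes the limitation explicit: a frame is flagged as soon as any zone reaches the limit, so the alert alone does not identify which animal performed which activity.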

(A) Raw activity data provided by the iMouseHub platform after seven days of postoperative monitoring of one DigiFrame using motion detection. The x-axis plots the hours per day; the y-axis shows the duration of the motions during the day. The days are displayed by the system in different colors and listed in the upper part of the graph. (B) Single frame extraction of one motion detection plotted in A, indicated by an arrow pointing to the frame's metadata. On the right side, the single frames are listed in a table. The frame table gives the event ID, the frame ID, the type, the time stamp, the time delta, and the score for every frame. For the motion analysis, the type, the time delta, and the score are crucial. On the right side, two single frames (frame ID 116 (upper frame) and 120) are pointed out by black arrows. The system types both frames as alert frames. During the experiment, motion detection was performed for four zones (C and D; eating, drinking, nesting, and climbing). In (C), frame ID 116, and in (D), frame ID 120 are displayed, including the system's raw motion detection data table (on the right). The raw data table shows the pixel differences in all four experiment zones. C shows that the system reported alarm pixels in the climbing (30%) and the drinking (16%) zones, giving an overall score of 40, as also listed in B. In D, the alert table displays the alarm pixels in the climbing (42%) and the drinking (9%) zones, leading to an overall score of 35.

3.5 Training of Machine Learning algorithms with automatically recorded data sets provides individual identification and behavior classification.

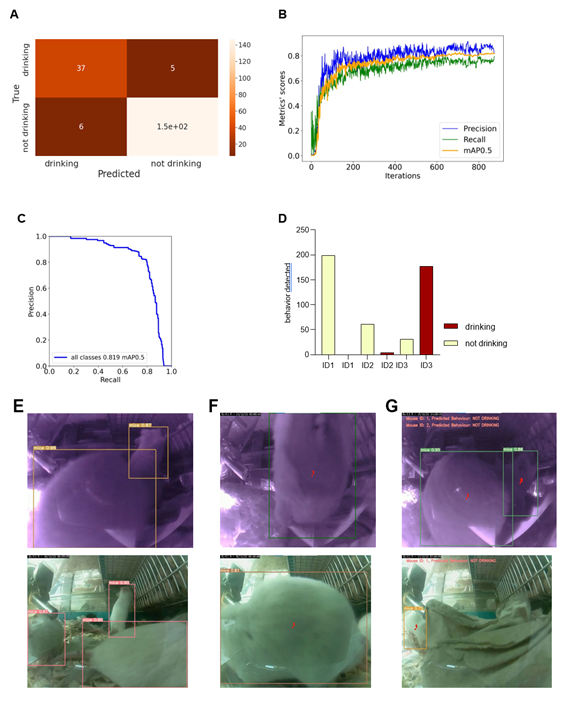

We reanalyzed the longitudinal post-operation dataset (Figure 4A) using AI models to understand the potential, advantages, and limitations of implementing machine learning algorithms within digital observation. We used ML algorithms to analyze the video files acquired by motion detection. The videos were recorded in both day and night modes. The first step was to randomly extract drinking events from the overall activity of the mice, which were kept in the cage for seven days after the operation. After the extraction, we divided the dataset into a training set and a validation set. During the later stages, data extracted from a specific video is not reshuffled but stays in the same set as the video, which prevents data leakage between the training and the validation sets. Next, data preprocessing and labelling steps were taken as a precondition for implementing the ML models, as summarized in Figure S2. Figure 5 illustrates the outcomes of the AI modelling, encompassing mice detection, behavior classification, and visual object tracking. Figure 5 is therefore divided into metrics describing the statistical results of the modelling part, the output bar chart generated after analyzing a short video file, and the visual confirmation of the achieved results. Figure 5A presents a confusion matrix displaying the classification outcome on the validation set. This matrix provides detailed information about the classification task, going beyond simple accuracy measurements. For classification, we utilized 1167 cropped frames of mice, with a roughly equal distribution between day and night modes. The dataset comprised 21% positive class images ('drinking') and 79% negative class images ('not drinking'). The training set constituted approximately 83% of the dataset, while the validation set accounted for 17%. 
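Keeping all frames of one video in the same set can be sketched as a split at the video level rather than the frame level. The following is a minimal illustration using only the Python standard library; the function name, the file names, and the greedy way of filling the validation set are assumptions and not the procedure used in the study.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Frame = Tuple[str, str]  # (video_id, frame_name)


def split_by_video(frames: List[Frame], val_fraction: float = 0.17,
                   seed: int = 0) -> Tuple[List[Frame], List[Frame]]:
    """Split frames into train/validation so that no video is in both sets."""
    by_video: Dict[str, List[str]] = defaultdict(list)
    for video_id, frame_name in frames:
        by_video[video_id].append(frame_name)

    # Shuffle whole videos, never individual frames.
    video_ids = sorted(by_video)
    random.Random(seed).shuffle(video_ids)

    target_val_size = val_fraction * len(frames)
    train: List[Frame] = []
    val: List[Frame] = []
    for video_id in video_ids:
        # Fill the validation set first, then send the remaining videos to training.
        bucket = val if len(val) < target_val_size else train
        bucket.extend((video_id, name) for name in by_video[video_id])
    return train, val


# Hypothetical example: 10 videos with 10 extracted frames each.
frames = [(f"video_{v:02d}", f"frame_{i:03d}.png")
          for v in range(10) for i in range(10)]
train_set, val_set = split_by_video(frames, val_fraction=0.17, seed=0)
train_videos = {video_id for video_id, _ in train_set}
val_videos = {video_id for video_id, _ in val_set}
print(len(train_set), len(val_set), train_videos & val_videos)
```

Because whole videos are assigned, the realized validation share only approximates the requested fraction; the gain is that near-identical neighbouring frames cannot appear on both sides of the split.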
We employed the oversampling method to balance the classes, which yielded favourable results. Ultimately, we achieved an accuracy of 94.36%, with a sensitivity of around 88.1% and a specificity of approximately 96.08%. It can be anticipated that, as the dataset's classes become more balanced, the disparity between the sensitivity and specificity metrics will diminish. Figures 5B and C display the general outcomes of the object detection modelling. Figure 5B presents the overall results of training the object detection model, evaluated on the validation set. The chart displays the mean average precision at an IoU (Intersection over Union) threshold of 0.5 ('mAP0.5'), the precision, and the recall as a function of the training iterations. Figure 5C presents the precision-recall curve, which displays the tradeoff between precision and recall for different threshold levels, measured after the last training iteration of the object detection model. The precision-recall curve was built on the validation set.
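The relation between a confusion matrix and the three reported classification metrics can be made explicit with a short worked example. The counts below are an assumption: they are not read from Figure 5A, but are one set of integers that reproduces the reported percentages for a validation set of roughly 17% of 1167 frames.

```python
def classification_metrics(tp: int, fn: int, fp: int, tn: int):
    """Return (accuracy, sensitivity, specificity) for a binary classifier."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    sensitivity = tp / (tp + fn)  # share of true 'drinking' frames found
    specificity = tn / (tn + fp)  # share of true 'not drinking' frames found
    return accuracy, sensitivity, specificity


# Illustrative counts (assumed, chosen to match the reported rates):
# 42 'drinking' and 153 'not drinking' validation frames, 195 in total.
acc, sens, spec = classification_metrics(tp=37, fn=5, fp=6, tn=147)
print(f"accuracy    = {acc:.2%}")   # 94.36%
print(f"sensitivity = {sens:.2%}")  # 88.10%
print(f"specificity = {spec:.2%}")  # 96.08%
```

With few positive frames, each missed drinking frame moves the sensitivity by more than two percentage points, which is one way to see why the gap between sensitivity and specificity is expected to narrow with a more balanced dataset.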

The area under the precision-recall curve yields the same result as the 'mAP0.5' measured at the end of the training process. We relied on 708 video frames for the object detection component, with a comparable number of images in day and night modes. The training set encompassed 82% of the dataset, while the validation set constituted 18%. Our conclusions were based on the previously mentioned 'mAP0.5' metric. We achieved a mean average precision of approximately 0.819, enabling the successful detection of mice in day and night modes and forming the foundation for our subsequent analyses. Figure 5D shows the output data as a bar chart. This chart displays the number of mice detections categorized by behavior and unique ID number. It was generated after analyzing a short video comprising 210 frames. It displays the individual behaviours of the three mice housed in the cage and shows that the mice with ID1 and ID2 were not drinking, while the mouse with ID3 was drinking. Figure 5E shows a visual confirmation of mice detection. The AI algorithm generates predictions once the confidence level reaches a certain threshold (in this case, 49%). Each detection is visually demarcated by a bounding box, with the confidence level displayed in the top-left corner of the box. Figure 5F illustrates the visual confirmation of object detection and tracking. Each tracked object is represented by a red dot positioned at the center of its bounding box and a unique ID number adjacent to the center point. The tracking of mice relies on their previous and current positions. Finally, Figure 5G combines the object detection, tracking, and behavior classification components. The unique ID number of each mouse is displayed in the top-left corner of the frame, accompanied by the predicted behavior for that mouse.
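How a confidence threshold, bounding-box centers, and position-based tracking fit together can be sketched as follows. The text does not specify the tracking algorithm, so the greedy nearest-center matching below is an assumption chosen for simplicity; all names and the example coordinates are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # x_min, y_min, x_max, y_max
Point = Tuple[float, float]


def center(box: Box) -> Point:
    """Center point of a bounding box (the red dot in Figure 5F)."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) / 2.0, (y0 + y1) / 2.0)


def keep_confident(detections: List[Tuple[Box, float]],
                   min_conf: float = 0.49) -> List[Box]:
    """Drop detections below the confidence threshold (49% in the text)."""
    return [box for box, conf in detections if conf >= min_conf]


def assign_ids(previous: Dict[int, Point], boxes: List[Box]) -> Dict[int, Point]:
    """Greedy nearest-center matching between previous and current positions."""
    centers = [center(b) for b in boxes]
    pairs = sorted((math.dist(prev, cur), track_id, idx)
                   for track_id, prev in previous.items()
                   for idx, cur in enumerate(centers))
    assigned: Dict[int, Point] = {}
    used = set()
    for _, track_id, idx in pairs:
        if track_id in assigned or idx in used:
            continue
        assigned[track_id] = centers[idx]
        used.add(idx)
    # Detections without a matching track get a new ID.
    next_id = max(previous, default=0) + 1
    for idx, cur in enumerate(centers):
        if idx not in used:
            assigned[next_id] = cur
            next_id += 1
    return assigned


# Invented positions of three mice in the previous frame and new detections.
previous = {1: (10.0, 10.0), 2: (50.0, 50.0), 3: (90.0, 20.0)}
detections = [((48, 46, 56, 58), 0.91), ((8, 9, 16, 13), 0.80),
              ((0, 0, 4, 4), 0.30), ((86, 18, 98, 26), 0.55)]
tracks = assign_ids(previous, keep_confident(detections))
print(tracks)
```

A matcher of this kind keeps IDs stable only while mice move little between frames and remain visible, which is consistent with longitudinal identification being named as an open limitation in the Discussion.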

Visualizations show metrics displaying the results of the modeling part (A, B, C), an example of the output data plotted as a bar chart (D), and examples of the achieved results in the form of information placed on the extracted video frames (E, F, G). (A) Confusion matrix displaying the results of the behavior classification on the validation set. The upper left shows true positives, the upper right false negatives, the lower left false positives, and the lower right true negatives. (B) Precision, recall, and mAP0.5 scores during the training process in relation to the iterations. The Y-axis shows the metric scores, and the X-axis displays the iterations. (C) Precision-recall curve showing the tradeoff between precision and recall for different threshold levels. The Y-axis shows the precision score, and the X-axis shows the recall score. (D) Individual drinking/not drinking behavior of three mice recorded within 10 s at 10 pm on the 15th of Dec. The bars represent the number of detections for each predicted behaviour in a randomly chosen video. The Y-axis shows the number of detections, and the X-axis displays the ID of each mouse in the cage. (E-G) Representative visual confirmations of the object detection, behavior classification, and object tracking parts. Frames extracted in night mode are displayed in the upper row and frames in day mode in the lower row. (E) shows the visual confirmation of the object detection with bounding boxes around each detected object. The object's class name and detection probability are displayed at the top-left of each bounding box. (F) shows the combination of the object detection and object tracking parts. A red dot marking the center point of each detection is placed on each detected object, with the unique ID number next to it. 
(G) displays the combined results of object detection, object tracking, and behavior classification. The predicted behaviour of each detected object is displayed on the upper-left side of each frame in the form of short information containing the predicted behaviour and the object's ID number.

3.6 Implementing the trained Machine Learning Algorithms enables the behavior-specific analysis of automatically recorded data sets.

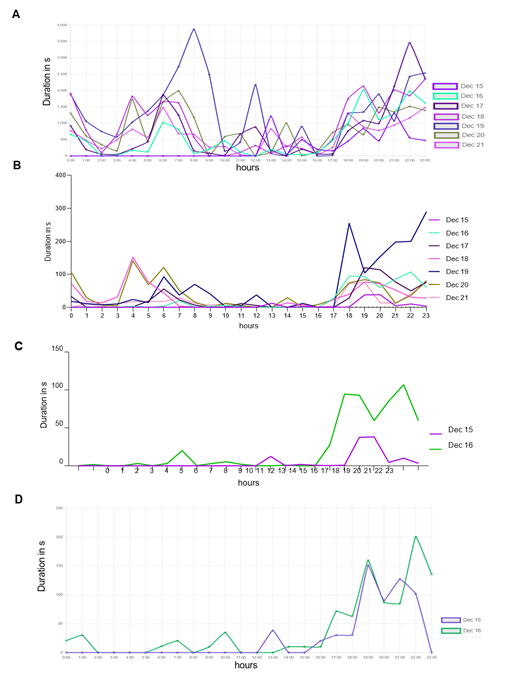

To acquire data for a specific activity from a series of zone detection experiments, we utilized machine learning (ML) algorithms. In Figure 6, we initially filtered the recorded events from the same experiment based on the "Motion: Drinking" category; the resulting data are presented in Figure 6A. The peak observed on the 19th of Dec. (Monday) can be attributed to the weekly cage-changing activity. Since this particular analysis aimed to evaluate the accuracy of our method, we opted for a manual evaluation of drinking events. To streamline the review process, we reduced the assessed period from 7 to 2 days.
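Filtering recorded events by zone and summarizing their duration per hour can be sketched as below. The export format of the iMouseHub platform is not described in the text, so the event fields (`start`, `duration_s`, `zones`) are hypothetical, and the example events are invented for illustration and are not study data.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List


def seconds_per_hour(events: List[dict], zone: str = "drinking") -> Dict[datetime, float]:
    """Sum the duration of events that involve `zone`, grouped by clock hour."""
    totals: Dict[datetime, float] = defaultdict(float)
    for event in events:
        if zone in event["zones"]:
            hour = event["start"].replace(minute=0, second=0, microsecond=0)
            totals[hour] += event["duration_s"]
    return dict(totals)


# Invented example events; a multi-zone event counts for every zone it lists.
events = [
    {"start": datetime(2022, 12, 19, 8, 13), "duration_s": 12.0,
     "zones": ["climbing", "drinking"]},
    {"start": datetime(2022, 12, 19, 8, 40), "duration_s": 5.5,
     "zones": ["drinking"]},
    {"start": datetime(2022, 12, 19, 9, 5), "duration_s": 7.0,
     "zones": ["nesting"]},
    {"start": datetime(2022, 12, 19, 22, 10), "duration_s": 20.0,
     "zones": ["drinking"]},
]
drinking_by_hour = seconds_per_hour(events)
for hour, seconds in sorted(drinking_by_hour.items()):
    print(hour.strftime("%Y-%m-%d %H:00"), seconds)
```

A zone filter of this kind still includes multi-zone events and events without actual drinking, such as cage changing, which is why a manual review or a downstream classifier is needed to separate real from false drinking events.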

Figure 6D displays the results after human experts reviewed the events. We then applied the ML algorithms to the events extracted from the iMouseHub platform and evaluated the results. Figure 6B displays the performance of the ML algorithms over the period of 7 days. We then reduced the analyzed period to compare it with the period reviewed by the human experts. Figure 6C displays the performance of the ML methods over the selected two days, based on the overall data extracted from Figure 6B. This made it possible to compare the performance of the ML algorithms (6C) with the results obtained after the human experts reviewed the events (6D). Over the seven days, the AI system recognized mice drinking behavior in 12.46% of the video frames that had previously been filtered using the system's "Motion: Drinking" behavior filter. Additionally, the system classified mice as drinking in 6.94% of the instances in which they were detected overall. The AI system confirms the peak of mice drinking activity on the 19th of Dec. Generally, mice were observed to drink most frequently during evening hours, with markedly reduced drinking activity during the day. Utilizing AI algorithms enables us to enhance the accuracy and efficiency of the analysis.
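The two percentages above use different denominators: video frames in one case and individual mouse detections in the other. The sketch below shows one possible way to compute both from per-frame classifier output; this reading of the two figures is an assumption, and the labels and example data are invented.

```python
from typing import List, Tuple


def drinking_shares(frames: List[List[str]]) -> Tuple[float, float]:
    """Return (share of frames with drinking, share of detections labelled drinking).

    `frames` holds one list per video frame with one label per detected mouse.
    """
    n_frames = len(frames)
    n_detections = sum(len(labels) for labels in frames)
    frames_with_drinking = sum(1 for labels in frames if "drinking" in labels)
    drinking_detections = sum(labels.count("drinking") for labels in frames)
    frame_share = frames_with_drinking / n_frames if n_frames else 0.0
    detection_share = drinking_detections / n_detections if n_detections else 0.0
    return frame_share, detection_share


# Invented example: four frames with two or three detected mice each.
example_frames = [
    ["drinking", "not drinking", "not drinking"],
    ["not drinking", "not drinking", "not drinking"],
    ["not drinking", "not drinking"],
    ["not drinking", "not drinking"],
]
frame_share, detection_share = drinking_shares(example_frames)
print(f"{frame_share:.2%} of frames, {detection_share:.2%} of detections")
```

With several mice visible per frame, the detection-level share is lower than the frame-level share, which is the same ordering as the two reported values.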

Time-dependent duration of detected and recorded drinking events, spanning from the recovery phase until one week after transplantation, for three mice in their Home-cage. Results are plotted as duration in seconds per recorded event against the hour. The human-based "by-hand observation" was performed as described in the animal experiment approval (N056/2020). (A) Detection for the drinking zone, extracted from the iMouseHub platform. (B) Data set pre-analyzed by the ML algorithms. (C) ML pre-analyzed data set of the 36 h after transplantation, extracted from (B). (D) Plotted data of the first 36 h after transplantation, reviewed by the user and classified as “false” or “real” events.

4. Discussion

In general, the scientific community is facing increasing challenges regarding animal-based experiments, with the reproducibility crisis as a primary driver. As a result, society is increasingly scrutinizing the use of laboratory animals in pre-clinical and clinical research studies [14, 18, 19]. However, the complex interplay of metabolic pathways and organs in a living animal is crucial for valid drug testing studies. Therefore, animal-based studies can only be replaced partially [2, 20]. At the same time, results obtained from animal experiments have revealed fundamental issues: low reliability, low reproducibility, unsatisfying transparency, and a low rate of translation to findings in humans. One reason for these challenges is the standard way of data acquisition and interpretation [21, 22]. In pre-clinical research studies and in the pharmaceutical industry, monitoring and data collection are mainly performed manually by humans during the daytime, which results in non-traceable and subjective data sets obtained in the non-active phase of the animals. Therefore, it is unsurprising that this kind of methodology leads to false results [23]. Here, we see a clear need for digitalization to improve and optimize data acquisition, which is the foundation for data interpretation. In this regard, defining and implementing objective and quantifiable data points (digital biomarkers) is essential [24]. Digital monitoring of experiments can provide such digital biomarkers and exclude the influence of human bias. Besides subjective data interpretation, a prominent aspect of the induced human bias is the continuous induction of stress by manual observation, which affects the experimental results and changes the overall outcome of a study [9].

Camera-based systems that can provide the digital monitoring described above are available, but these are either highly specialized solutions that are not affordable for pre-clinical research projects or self-made solutions [11, 14, 25, 26]. Because hardware and software, including the data collection and storage processes, are not standardized, such systems cannot serve as a foundation for an overall and scalable digitalization approach. Here, we present an affordable retrofit system for remote digital home-cage observation in IVCs, which can be used as a foundation to digitalize animal-based pre-clinical studies. This system provides digital biomarkers generated in a standardized way as objective, quantifiable data, enhancing the precision and efficiency of experiments. Our study listed the technical details and showed the developed user interface. This was followed by an investigation of the feasibility of the monitoring system regarding the remote live view and recording functions for observing animals within an ongoing pre-clinical study. The recorded data was used to show this method's advantages and limitations and, finally, to train machine learning algorithms aiming to identify individual mice and their drinking activities during the recording. Finally, we employed the algorithms to analyze the obtained data set for drinking behaviour. As an overall result, we could show in this study that the iMouse solution can be integrated into the research environment, which has been identified as a general challenge by the community [24]. We showed that scientific staff can use the system within the institute and, via a VPN connection, remotely. The data handling and analysis process generated associated metadata, such as time stamps, so that raw data can be traced and assigned by name, date, and time.

The digital observation platform was adapted for the study and the presented system, and allows the user to set up, view, and review single experiments. Various monitoring methods and use cases can be conducted with the system, from manual but digital observation of a single cage via live view to recording a specific time frame. In the underlying use case, the frequency and duration of drinking events were used as digital biomarkers. To access this biomarker, we found a feasible solution in the system's zone-based recording function. Video recording was started automatically by the system when a change in the image composition was detected in the marked zone. However, if changes in the image composition were detected in several zones within one observation event (recording), the system could provide a multi-zone recording but not a classification of a single behaviour. Here, Machine Learning showed its advantage by deriving a single behavior classification from a multi-zone-based recording. We showed the training and evaluation of the developed algorithms and reached an accuracy of 94.36%, a sensitivity of 88.1%, and a specificity of 96.08% for the use case parameter drinking. The developed object detection is valuable for identifying the single mouse, since we reached a mean average precision (mAP0.5) of 0.819 after training and validation. Through the ML implementation, we could increase the precision and quality of the data. By combining both algorithms, we could ensure the identification during a recorded event.

Further training and optimization are needed to identify the mouse ID longitudinally, since the algorithm must recognize a mouse by ID-specific characteristics. Here we see the current limitation of the system, which will be tackled in a following study. Nevertheless, the system's recording option and the downstream analysis by ML algorithms are able to distinguish a specific parameter from the whole activity set. This is a valuable advantage for the underlying study, since the mice can recover without the influence of the inspector while the inspector can still follow their well-being. For the first time, we showed a complete and standardized digitalization approach, which can be used as a blueprint for obtaining unbiased data within the common mouse home-cage as a retrofit installation. The need to collect unbiased data sets is increasingly important, especially for the approval of new drugs. Here, the failure rates of individual substances are very high, especially regarding efficacy and safety [27]. An unbiased pre-clinical measurement of efficacy and safety can reduce these failure rates, as these measurements are the foundation for initiating clinical phase II and, ultimately, phase III trials. The interpretation of these results will become more precise as more valuable information accumulates, e.g., behavioural aspects and activity parameters of experimental animals during a crucial phase of the experiments. At this point, we generate large data sets and therefore face the next challenge: handling and interpreting those big data sets [28]. Implementing Machine Learning and Deep Learning will help to exploit the collected data sets. The advantages of ML have already been used in translational and medical research for screening and analyzing patient-derived data sets [29]. 
In genomics and neuroscience research, as well as in the prediction of zoonotic animal movement [30], ML processes are used to test research hypotheses and, as recently shown, to predict and classify animal motions without human interaction [31]. We believe that in pre-clinical studies, reducing human bias will improve the reliability and quality of results and increase animal welfare, since the severity level experienced by the animals is increased by the continuous induction of stress during inspection.

Outlook

Our upcoming efforts will concentrate on three main parts. Firstly, improvements to the iMouseHub platform regarding user management and functionality. Secondly, the overall system optimization through the further development of standardized hardware (camera, night lights) and control software, focusing on scalability, data usage (processes, usage of codecs, etc.), and system handling and service. Thirdly, increasing the accuracy and efficiency of AI and Machine Learning and the number of automatically recognized behaviors, meaning higher prediction levels using fewer data sets. On top of that, the differentiation of several animals is a challenge we are facing, especially with a focus on the 3Rs by avoiding implants and sensors within the animals. Our overarching objective is to train ML algorithms using a community-based, incremental learning approach that interconnects participating laboratories and speeds up AI development while improving its quality. This approach aims to elevate the quality, accuracy, and reliability of data generation and utilization.

Study approval

Primary human cancer cells were isolated from resection patients suffering from HCC using protocols approved by the Ethical Committee of the city and state of Hamburg (PV3578) and in accordance with the principles of the Declaration of Helsinki. Animals were housed under specific pathogen-free conditions according to institutional guidelines under authorized protocols. All animal experiments were conducted in accordance with the European Communities Council Directive (86/EEC) and were approved by the City of Hamburg, Germany (N056/2020).

Author Contributions

JK and ML initiated and supervised the research study; IC designed and remodulated the software; DS, ML, and ML designed the hardware. DS and ML integrated the hardware. JK, ML, MF, and UM designed and conducted experiments, and JK acquired data. IC, MF, and K analyzed data; JK, ML, NS, and OS wrote the manuscript. All authors discussed the data and corrected the manuscript.

Acknowledgments

We thank Tobias Gosau for his excellent work with the mouse colony. We thank Norbert Zangenberg and Heiko Juritzka for their IT support during the project. We thank the LIV administration for the fruitful cooperation.

Financial Supports

The DFG funded the animal experiments (KA 5390/2-1). Hardware and software were financed by IIoT-Projects GmbH, private investors, and Thaumatec Tech Group.

References

- Roberts R, McCune SK. Animal studies in the development of medical countermeasures. Clinical pharmacology and therapeutics 83 (2008): 918-20.

- Mikulic M. Annual number of animals used in research and testing in selected countries worldwide as of 2020 (2021).

- Tannenbaum J, Bennett BT. Russell and Burch's 3Rs then and now: the need for clarity in definition and purpose. J Am Assoc Lab Anim Sci 54 (2015): 120-32.

- Bryda EC. The Mighty Mouse: the impact of rodents on advances in biomedical research. Mo Med 110 (2013): 207-11.

- Fenwick N, Griffin G, Gauthier C. The welfare of animals used in science: how the "Three Rs" ethic guides improvements. Can Vet J 50 (2009): 523-30.

- (DC) NRCUCoRaAoDiLAW. Avoiding, Minimizing, and Alleviating Distress: National Academies Press (US) (2008).

- Ghosal S, Nunley A, Mahbod P, Lewis AG, Smith EP, Tong J, et al. Mouse handling limits the impact of stress on metabolic endpoints. Physiology & behavior 150 (2015): 31-7.

- Dickens MJ, Romero LM. A consensus endocrine profile for chronically stressed wild animals does not exist. General and Comparative Endocrinology 191 (2013): 177-89.

- Patchev VK, Patchev AV. Experimental models of stress. Dialogues Clin Neurosci 8 (2006): 417-32.

- Boonstra R. Reality as the leading cause of stress: rethinking the impact of chronic stress in nature. Functional Ecology 27 (2013): 11-23.

- Singh S, Bermudez-Contreras E, Nazari M, Sutherland RJ, Mohajerani MH. Low-cost solution for rodent home-cage behaviour monitoring. PloS one 14 (2019): e0220751.

- Gaburro S, Winter Y, Loos M, Kim JJ, Stiedl O. Editorial: Home Cage-Based Phenotyping in Rodents: Innovation, Standardization, Reproducibility and Translational Improvement. Frontiers in neuroscience 16 (2022): 894193.

- Voikar V, Gaburro S. Three Pillars of Automated Home-Cage Phenotyping of Mice: Novel Findings, Refinement, and Reproducibility Based on Literature and Experience. Front Behav Neurosci 14 (2020): 575434.

- Grieco F, Bernstein BJ, Biemans B, Bikovski L, Burnett CJ, Cushman JD, et al. Measuring Behavior in the Home Cage: Study Design, Applications, Challenges, and Perspectives. Front Behav Neurosci 15 (2021): 735387.

- Hobson L, Bains RS, Greenaway S, Wells S, Nolan PM. Phenotyping in Mice Using Continuous Home Cage Monitoring and Ultrasonic Vocalization Recordings. Curr Protoc Mouse Biol 10 (2020): e80.

- Newbold HG, King CM. Can a predator see invisible light? Infrared vision in ferrets (Mustela furo). Wildlife Research 36 (2009): 309-18.

- Yiu WK. Implementation of paper - YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors (2023).

- Defensor EB, Lim MA, Schaevitz LR. Biomonitoring and Digital Data Technology as an Opportunity for Enhancing Animal Study Translation. ILAR Journal 62 (2021): 223-31.

- Design preclinical studies for reproducibility. Nature Biomedical Engineering 2 (2018): 789-90.

- Hajar R. Animal testing and medicine. Heart Views 12 (2011): 42.

- Begley CG, Ellis LM. Raise standards for preclinical cancer research. Nature 483 (2012): 531-3.

- Ferreira GS, Veening-Griffioen DH, Boon WPC, Moors EHM, van Meer PJK. Levelling the Translational Gap for Animal to Human Efficacy Data. Animals (Basel) 10 (2020).

- Polson AG, Fuji RN. The successes and limitations of preclinical studies in predicting the pharmacodynamics and safety of cell-surface-targeted biological agents in patients. British journal of pharmacology 166 (2012): 1600-2.

- Baran SW, Bratcher N, Dennis J, Gaburro S, Karlsson EM, Maguire S, et al. Emerging Role of Translational Digital Biomarkers Within Home Cage Monitoring Technologies in Preclinical Drug Discovery and Development. Front Behav Neurosci 15 (2021): 758274.

- Bains RS, Cater HL, Sillito RR, Chartsias A, Sneddon D, Concas D, et al. Analysis of Individual Mouse Activity in Group Housed Animals of Different Inbred Strains using a Novel Automated Home Cage Analysis System. Frontiers in Behavioral Neuroscience 10 (2016).

- Jhuang H, Garrote E, Yu X, Khilnani V, Poggio T, Steele AD, et al. Automated home-cage behavioural phenotyping of mice. Nature communications 1 (2010): 68.

- Arrowsmith J, Miller P. Phase II and Phase III attrition rates 2011–2012. Nature Reviews Drug Discovery 12 (2013): 569.

- Valletta JJ, Torney C, Kings M, Thornton A, Madden J. Applications of machine learning in animal behaviour studies. Animal behaviour 124 (2017): 203-20.

- Bernstam EV, Shireman PK, Meric-Bernstam F, M NZ, Jiang X, Brimhall BB, et al. Artificial intelligence in clinical and translational science: Successes, challenges and opportunities. Clin Transl Sci 15 (2022): 309-21.

- Wijeyakulasuriya DA, Eisenhauer EW, Shaby BA, Hanks EM. Machine learning for modeling animal movement. PloS one 15 (2020): e0235750.

- Marks M, Jin Q, Sturman O, von Ziegler L, Kollmorgen S, von der Behrens W, et al. Deep-learning-based identification, tracking, pose estimation and behaviour classification of interacting primates and mice in complex environments. Nature Machine Intelligence 4 (2022): 331-40.

Supplemental files

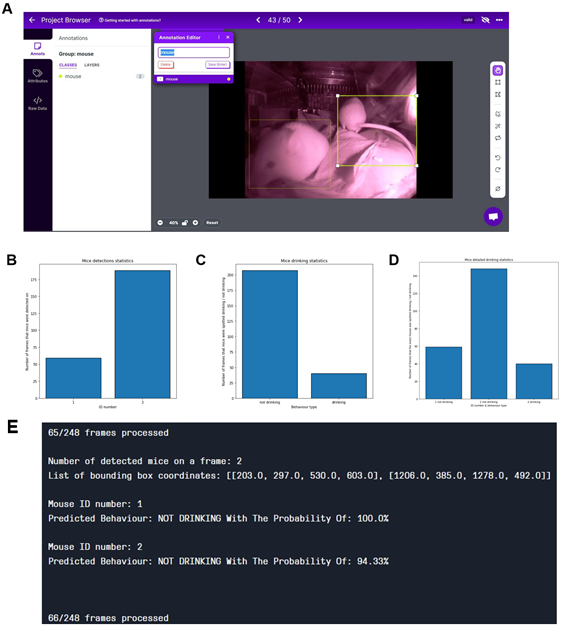

Displays a single frame of a recorded event (video file) in the Home-cage showing experiment-specific zones for, e.g., drinking, climbing, nesting, and eating, marked by white arrows. Also shown is the embedded metadata (name of the observation unit, date, and time of the recording) at the top; on the left, the properties belonging to the event are listed. The metadata shown in the video file allows unambiguous assignment. In the lower part, the timeline for the video is displayed. Within the timeline, the activity peaks are shown as red columns. The properties of the video, e.g., the storage path, the time, name, and duration, are uniquely assigned to the video and are shown at higher magnification in the lower left. The recorded videos can be played at different speeds to watch specific alerts. The system records the events in single frames. Therefore, the user can watch and analyze the event frame by frame.

(A) shows data labeling and annotation with CVAT and Roboflow. Most of the labeling process is based on the Roboflow framework, which facilitates open-source functionalities. Roboflow itself is a developer framework focusing on Computer Vision. It enables quick and practical steps covering data collection, preprocessing, and model training techniques. (B-D) show the export data generated in “YOLOv7Pytorch” format, with the output in analytical form represented by three charts. (B) represents the number of frames in which each mouse was detected in the video. (C) represents the general drinking statistics, i.e., the sum of frames in which the mice were spotted drinking or not drinking, combined over all mice. (D) presents detailed drinking statistics, i.e., the number of frames in which each mouse was spotted drinking, listed by unique ID number. (E) represents the output data for the single frame shown in (A), including the mouse ID and the probability of the behavior.
