Lung Sounds Ventilation Cycle Segmentation and Classify Healthy, Asthma and COPD

Gunes Harman

Computer Engineering Department, Yalova University, Yalova, 77100, Turkiye

*Corresponding author: Gunes Harman, Computer Engineering Department, Yalova University, Yalova, 77100, Turkiye

Received: 08 January 2024; Accepted: 16 January 2024; Published: 19 January 2024

Article Information

Citation: Gunes Harman. Lung Sounds Ventilation Cycle Segmentation and Classify Healthy, Asthma and COPD. Fortune Journal of Health Sciences. 7 (2024): 13-24.

Abstract

The identification of the ventilation cycle holds significant importance in clinical cases, as it provides crucial insights into respiratory patterns. Each breathing cycle possesses unique characteristics closely related to specific pathological information. This plays a vital role in the accurate diagnosis of respiratory diseases and aids in making informed decisions regarding the overall health status of the subject. In this study, subjects were classified as either healthy or pathological (asthma or COPD) using two popular machine learning algorithms: Artificial Neural Network (ANN) and K-Nearest Neighbor (KNN). These algorithms were employed to analyze the inspiration and expiration phases of the respiratory cycle, extracting valuable information for the classification task. By leveraging the distinctive features observed during these phases, the algorithms aimed to accurately categorize individuals based on their respiratory health. The classification results obtained from the ANN and KNN algorithms were evaluated using sensitivity, specificity, and accuracy metrics. Additionally, this study compares lung sound signals that were labeled and categorized by medical professionals (Class 1) with automatically segmented lung sound signals (Class 2). The latter are obtained through an automated segmentation process that extracts specific portions of the recorded lung sounds based on predefined criteria.

Keywords

Classification, Ventilation Cycle, Lung Sounds, Machine Learning

Article Details

1. Introduction

Breathing maneuvers, specifically the inspiratory-expiratory cycle, are based on the gas exchange principle. Breath sounds result from the movement of air through the respiratory system and are caused by turbulence of the air at the level of the lobar or segmental bronchi. Lung sounds (LS) play a significant role in determining the health of the lungs. Changes to the lung’s structure can alter the characteristics of the sound. These modifications may be audible as unusual or additional sounds that are not part of the usual soundscape. When abnormalities are present or about to occur in the lungs, especially in the airway passages, pathological sounds such as wheezes, rhonchi, and crackles serve as indicators and can provide important clues to lung diseases and conditions. To make an accurate diagnosis of pulmonary disease, it is essential to comprehend the changing lung mechanics [1].

Breath sounds, an essential diagnostic tool in pulmonary medicine, can be classified into two distinct categories: “normal” and “adventitious” (abnormal). Normal breath sounds are generated by healthy lungs and are characterized by an open airway, normal respiratory rhythm, normal respiration rate, and normal chest expansion and relaxation. Depending on the location where they are recorded, normal sounds are further classified as tracheal, vesicular, bronchial, or bronchovesicular. Tracheal sounds are audible over the trachea, while vesicular sounds are heard over most of the lung fields. Bronchial sounds are loud and high-pitched and are heard only over the manubrium, and bronchovesicular sounds are a mixture of bronchial and vesicular sounds, heard over the bronchial tree and upper lung fields. In contrast, “adventitious” sounds are produced by unhealthy lungs and can be described as “extra or additional sounds” that are superimposed on normal lung sounds. They usually indicate impending abnormalities in the lungs and may signify underlying respiratory conditions. Adventitious sounds can be further classified into wheezes, crackles, rhonchi, and pleural rubs, among others, depending on their characteristics and location. Understanding the types and characteristics of breath sounds can therefore provide valuable insights into the health of an individual’s lungs and help in the diagnosis and treatment of respiratory disorders [2].

Auscultation, that is, listening to body sounds, is the oldest and most prevalent method of diagnosing respiratory health problems. A physician uses a stethoscope to diagnose and treat respiratory illnesses in patients. The stethoscope amplifies the frequencies of interest and transmits the sound as pressure waves detectable by the ear. Using a stethoscope, physicians can detect and differentiate between normal and abnormal lung sounds, which may suggest respiratory disorders. The most crucial part of auscultation is the interpretation of the sounds in terms of findings and correct symptoms. This determines whether the general physiological structure of the lung is afflicted or functioning normally. For instance, a “crackle” is an abnormal, discontinuous, explosive sound that occurs frequently during the inspiratory phase of respiration [3]. A wheeze is a persistent, abnormal sound heard throughout the lung at the beginning of the exhalation phase. In contrast, crackles have a broader frequency distribution and occur in the frequency range of 400 Hz to 600 Hz. Doctors rely heavily on their ability to hear and interpret unique lung sounds from the entire chest wall, one side of the chest wall, or a particular region of the chest wall during inspiration, expiration, or both, when diagnosing patients. Therefore, a computer-based approach is an acceptable tool for objective assessment, reducing subjective elements in the diagnosis of lung illnesses. In computer-based lung sound analysis, the patient’s lung sounds are recorded using an electronic device, followed by signal analysis using various processing techniques, such as noise reduction, filtering, up-sampling, down-sampling, and segmentation. The final and most crucial step is to categorize these sounds according to certain signal characteristics by using various collections and combinations of distinct classifiers.

The advancement of technology, especially in the area of data processing, has led to the emergence of personal computers (PCs) and other digital devices. In medical research, this development has opened up new opportunities for analyzing and classifying lung sound waves. Researchers have presented and recommended various approaches to analyzing lung sounds over the past few decades. Many studies have utilized digital signal processing and pattern recognition methods to extract important information from lung sound signals, and most of the research in this area has focused on using different feature extraction and selection techniques to identify the key characteristics of these signals. These techniques include time-frequency analysis, wavelet transform, Fourier transform, and principal component analysis, among others. By extracting and selecting relevant features, researchers can effectively reduce the complexity of the signal and improve the accuracy of the analysis. Machine learning algorithms, alone or in combination, have been widely used in the decision-making process for classifying lung sounds. Proposed classification techniques include k-nearest neighbor, decision trees, support vector machines, artificial neural networks, and deep learning. These methods have been shown to be effective in accurately classifying lung sounds and providing valuable information for the diagnosis and treatment of respiratory disorders. In summary, digital technology and advanced algorithms have greatly improved the ability to analyze, classify, and interpret lung sound signals, providing valuable insights into the health of an individual’s respiratory system.

The study [4] has been criticized for placing excessive emphasis on the categorization of normal and abnormal breath sounds based on Linear Prediction Coefficients (LPC) and Energy Envelope characteristics. The researchers aimed to describe distinct forms of breathing sounds and classify them automatically, but five of the 105 experiments conducted in their study were erroneous. Another study [5] proposed the categorization of normal and abnormal (adventitious) breath sounds using the Autoregressive (AR) model. The breath sounds were segmented, and AR analysis was performed on these segments. By categorizing the AR parameters in each segment, the classification result was 87.5% for the quadratic classifier and 93.75% for the K-nearest neighbor classifier using the leave-one-out approach. In separate research [6], a three-category classification of respiratory sounds was developed using the Fourier Spectrum (FC) for feature extraction. A comparative analysis of Competitive Neural Network and Feed-Forward Neural Network performance revealed that up to 95% of the training vectors and 43% of the test vectors could be correctly categorized. In [7], respiratory sounds were categorized as healthy or asthmatic using the “Grow and Learn” Neural Network classification approach. Sounds were captured from twenty participants; ten were deemed healthy, while the remaining ten were diagnosed with pathology. Feature vectors were obtained from wavelet coefficients, and the classification performance of the Grow and Learn Neural Network for asthma patients and normal people was found to be 98%. The research [8] classified lung sounds into six categories (normal, wheeze, crackle, squawk, stridor, and rhonchus) using a level-seven Discrete Wavelet Transform (DWT) as the lung sound feature extraction approach. 
The ANN classification accuracy was stated to be at a 90% level for the categorization of lung sounds. The central concept of the study [9] is the multichannel acquisition and categorization of normal and pathological lung sounds. Features were extracted using the multivariate autoregressive (MAR) model, SVD and PCA were applied to the feature vectors (FV) for dimensionality reduction, and classification was performed by a supervised neural network with the backpropagation method and the Levenberg-Marquardt adaptation rule. With SVD, a 20-node input and hidden layer yielded the highest performance, whereas with PCA a 25-node input layer and a 10-node hidden layer produced the best results. Another study [10] reported on the classification of normal and abnormal lung sounds based on Quantile Vectors derived by Fast Fourier Transform (FFT) analysis. Lung sounds were collected from several sources, including 8 normal, 4 crackles, 7 wheezes, 4 stridor, 5 asthmatic, and 28 normal subjects from ITM. The system obtained 100 percent accuracy and validated the efficacy of Quantile Vectors for lung sound categorization. The research [11] identified normal and pathological lung sounds using a Neural Network and a Support Vector Machine (SVM) with wavelet coefficients. Normal, wheeze, stridor, crackles, rhonchi, and squawks were the six classes used to categorize lung sounds. The results show that the SVM was effective, with an accuracy ranging from 93.51 to 100 percent for the classification of lung sounds. The research [12] describes MFCC approaches for detecting and classifying breath cycles as inhale and exhale. Within breathing cycles, the timing and period of inspiration and expiration are grouped, and the MFCC characteristics highlight the variations between inhalation and exhalation in the frequency domain. This approach does not currently have any real-world applications. The primary purpose of the investigation in [13] is to better define and categorize breath sounds. 
The average power spectral density was used to represent various types of sounds. The sounds were recorded from the bronchial area of the chest and categorized as inspiratory, expiratory, normal, and aberrant. The classification process used the ANN backpropagation algorithm. The true positive results indicate good performance, but the approach is not suitable for long-term breath sounds.

Despite the existence of numerous studies and applications that have utilized similar methodologies, the present study aims to provide a fresh perspective on the potential of sensor recording systems in classifying lung sound signals. The primary objective of this investigation is to examine the performance of a single sensor channel in detecting lung sounds, which are analyzed using a computer-based approach. Specifically, the study focuses on collecting lung sound signals from the right and left lobes of the lungs at specific auscultation sites, with particular emphasis on the inhalation and expiration phases. Through this approach, the study seeks to shed light on the potential of sensor-based systems in accurately classifying lung sound signals, which could have significant implications for the development of more effective diagnostic tools for respiratory diseases. The classification of healthy or pathological conditions is crucial for decision-making processes in respiratory health, and each respiratory segment is a vital factor in determining an individual’s health status. To achieve this, various approaches have been utilized in previous research. One of the methods employed is the FFT-Welch approach for power-spectral estimation, which is straightforward and easy to understand. Another is the discrete wavelet transform, which reduces the data size by resolving the frequency intervals of the respiratory sounds. In addition, machine learning algorithms such as ANN and KNN are employed to further improve the decision-making process. These algorithms help in identifying the features that distinguish healthy and pathological conditions, and their use for this purpose has been shown to be effective and accurate. Through these methods, we can determine the health status of an individual’s respiratory system and take appropriate measures to maintain or improve it.

The main objective of this paper is to present effective and practical methods for determining the breathing cycle and classifying it as either healthy or unhealthy. The study aims to contribute to the field of respiratory diagnosis by using lung sounds over the complete cycle of breathing, including both inhalation and exhalation. To obtain lung sounds, auscultation was conducted on both the right and left lobes of the lungs at specified auscultation locations. This allows for a comprehensive analysis of the respiratory sounds and facilitates accurate identification and classification of the breathing cycle as either healthy or pathological. By identifying effective and practical ways of determining the breathing cycle, this research can contribute to the early detection and diagnosis of respiratory conditions, potentially leading to improved patient outcomes and quality of life.

2. Materials and Methods

2.1 Sound Acquisition and Recording Information

In LS analysis, auscultation locations are chosen to provide acceptable and applicable analysis. They also offer information on whether the sounds differ between locations. Recent investigations have utilized a variety of auscultation locations, such as the lung surface [8, 14, 15], the sternum [16, 17], and the posterior sternum [18-20]. The midclavicular lines on the right and left, as well as the manubrium sterni and the underside of the scapula, were marked off as reference points. The microphone was held at the third, fourth, fifth, and sixth positions. All the lung sounds were recorded at the College of Medicine at the University of Gaziantep Hospital from volunteers who visited the lung disease clinic. The sounds were recorded in a quiet clinical room at the hospital under physician care. Ethical approval was obtained from the local “Ethical Committee” of the College of Medicine at the University of Gaziantep Hospital. All methods were performed in accordance with the relevant guidelines and regulations.

Table 1: Distribution of subjects

| Subject | Male | Female | Smoker | Non-Smoker | Age Range (years) |
|---|---|---|---|---|---|
| Healthy | 16 | 9 | 7 | 18 | 24-55 |
| Asthma | 7 | 18 | 9 | 16 | 18-63 |
| COPD | 6 | 4 | 4 | 6 | 25-80 |
| Total | 39 | 21 | 20 | 40 | - |

This work is supported by TUBITAK under the project “Analysis of the Respiratory Disease Diagnosis and Electronic Auscultation Sound Device Design” (MAG104M38). The inclusion criteria for volunteers were as follows. Participants gave written informed consent before the recording and examination procedure. Each volunteer was above 18 years old. The physician determined which participants were suitable for this study. Volunteers in the normal group had no detected lung disease, while volunteers in the abnormal group were patients with lung disease (asthma or COPD). Volunteers who attended the lung disease clinic at the University of Gaziantep’s College of Medicine contributed all of the sounds. The datasets generated and/or analyzed during the current study are not publicly available due to personal data protection law but are available from the corresponding author on reasonable request.

Recordings of lung sounds were taken from volunteers breathing normally in a quiet clinical room at the hospital. Both sides of the lungs were auscultated simultaneously during inhalation and exhalation at the same location. The sound recording for each volunteer lasted between 10 and 14 seconds. The age, gender, and smoking status distribution of the subjects can be found in Table 1. The local “Ethical Committee” granted clearance according to ethical standards. In total, 25 normal, 25 asthmatic, and 10 COPD-related sound signals were recorded. The sounds were sampled at 8 kHz with 16-bit resolution using a Sony ECM T-150 electret condenser microphone with 2,200-ohm impedance and a 50-15,000 Hz response bandwidth. However, background noise and devices in contact with the skin can distort lung sound signals.

Breathing sounds in healthy lungs have a frequency range that extends up to 1000 Hz, while pathological sounds such as wheezes, crackles, and other aberrant sounds can reach up to 2000 Hz [21, 22]. To filter out noise sources such as heartbeat and muscle movement during the capture of lung sound signals, a filter with a 100 Hz lower cut-off is used. In previous studies, various frequency ranges and cut-off frequencies have been utilized, including 70-2000 Hz [21], 80-4000 Hz [23], 20-1200 Hz [24], 150-2000 Hz [25], 148-2000 Hz [26], and 75-2000 Hz [9]. In this investigation, the primary frequency range of the recorded lung sound signal is 100 to 2000 Hz. A band-pass finite impulse response (FIR) filter was used to eliminate DC components and filter out frequencies below 100 Hz and above 2000 Hz.
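The 100-2000 Hz band-pass FIR stage can be illustrated with a minimal windowed-sinc sketch. The text does not state the filter order or design method, so the 401-tap length, the Hamming window, and the function names below are assumptions chosen only for illustration, not the filter used in the study.

```python
import math

def sinc(x):
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def bandpass_fir(num_taps, low_hz, high_hz, fs):
    """Windowed-sinc band-pass FIR design (Hamming window, odd num_taps)."""
    mid = (num_taps - 1) / 2.0
    fl, fh = low_hz / fs, high_hz / fs        # cut-offs in cycles per sample
    taps = []
    for n in range(num_taps):
        k = n - mid
        # ideal band-pass = low-pass(high cut-off) minus low-pass(low cut-off)
        ideal = 2 * fh * sinc(2 * fh * k) - 2 * fl * sinc(2 * fl * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    return taps

def fir_filter(taps, signal):
    """Direct-form FIR filtering with zero initial state."""
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, h in enumerate(taps):
            if i - j >= 0:
                acc += h * signal[i - j]
        out.append(acc)
    return out

def gain_at(taps, freq_hz, fs):
    """Magnitude of the filter's frequency response at freq_hz."""
    re = sum(h * math.cos(2 * math.pi * freq_hz * n / fs) for n, h in enumerate(taps))
    im = sum(h * math.sin(2 * math.pi * freq_hz * n / fs) for n, h in enumerate(taps))
    return math.hypot(re, im)

FS = 8000                                     # sampling rate from the text (Hz)
TAPS = bandpass_fir(401, 100.0, 2000.0, FS)   # 401 taps is an assumed length
```

A longer filter gives a sharper transition around 100 Hz at the cost of more computation and delay, so the tap count is a trade-off rather than a fixed requirement.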

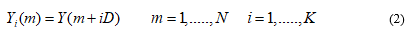

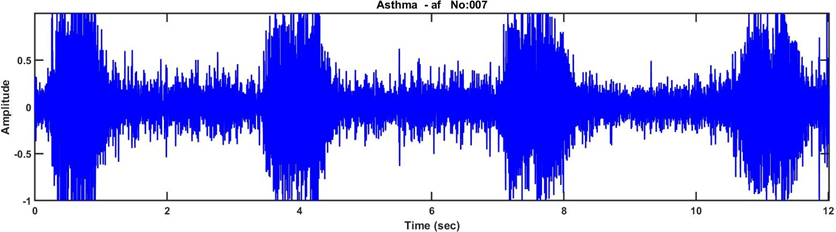

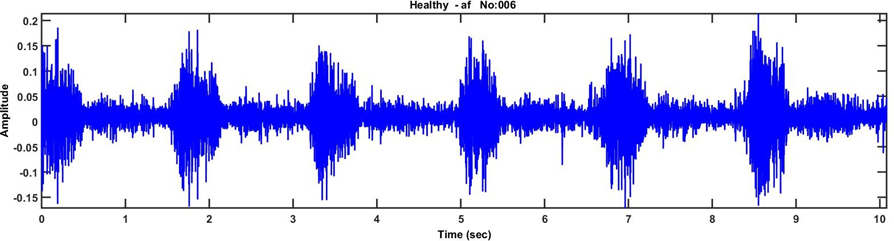

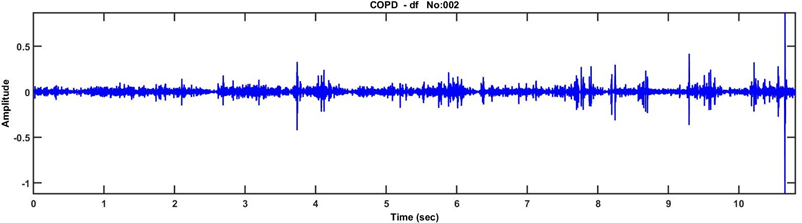

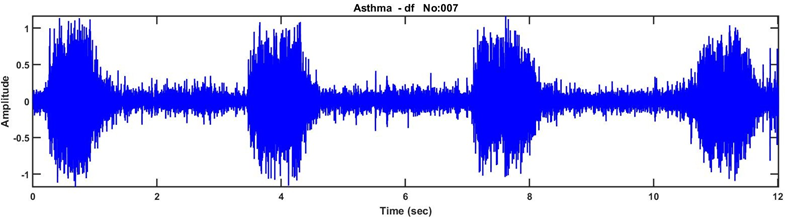

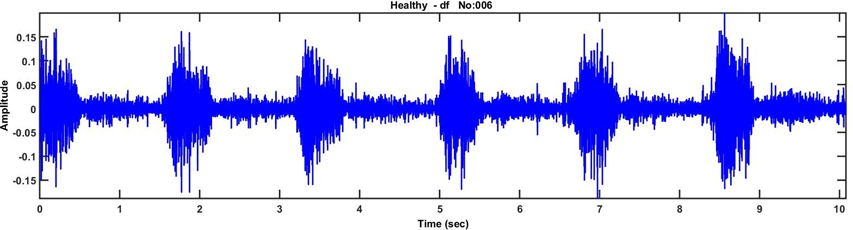

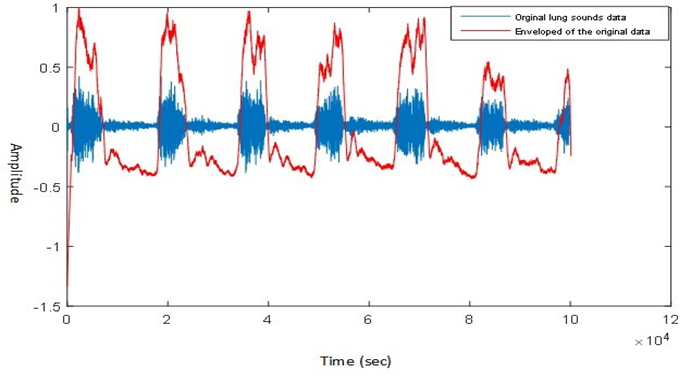

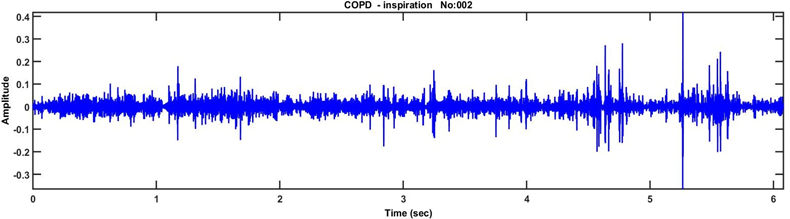

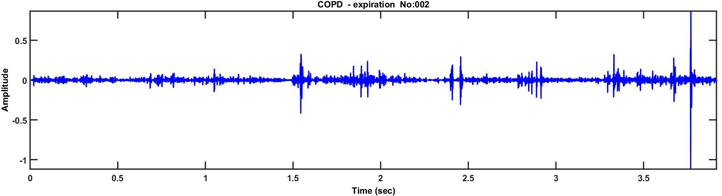

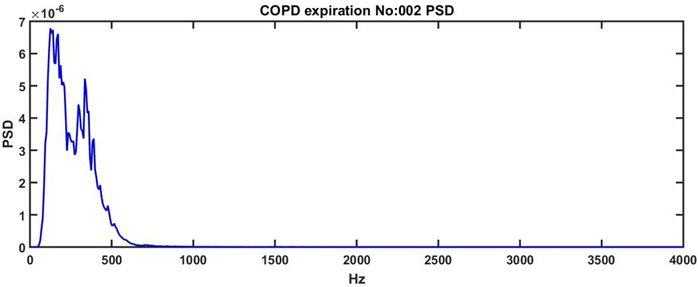

Figures 1, 2, and 3 display the original time-amplitude waveforms of the recorded healthy and diseased respiratory sound signals, and Figures 4, 5, and 6 display the same signals after band-pass filtering.

2.2 Phase Detection

The essential step for detecting the fundamental component of respiratory sounds is the detection of the inspiration and expiration segments of the breathing cycle, that is, detecting the overall form of the signal. In the first stage, the signal is squared, which eliminates energy loss of the signal. The second stage receives the output of the first stage and down-samples it. The inspiratory and expiratory cycle lasts around 1.5 seconds, so the new sampling frequency should be at least 3 Hz. The recorded sampling frequency is 8000 Hz and the down-sampling factor is 40, resulting in a new sampling frequency of 200 Hz, which is significantly higher than 3 Hz. In the third stage, the signal is low-pass filtered to ease the processing load. Once everything is processed, the final signal structure is displayed as illustrated in Figure 7. The sound signal consists of six breathing cycles, that is, six inhalations and six exhalations, and the captured lung sound signal is around 12 seconds long. In the second part of the procedure, each phase is located automatically using the baseline amplitude value Bs, the threshold value for the local maximum Max, and the local-minimum values Min (e). Each peak corresponds to the midpoint and endpoint of the respiratory phase: Max denotes the time at which half of the inspiration occurs, while Min (e) represents the time at which expiration occurs. The identification of each breath starts at the beginning of the inspiration period. The code for the procedure was developed in MATLAB R2015a.
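The three stages described above (squaring, down-sampling by 40, low-pass filtering) followed by extremum search can be sketched as follows. This is an illustrative Python sketch, not the MATLAB code of the study; the block-averaging decimator, the moving-average low-pass filter, the threshold value, and the synthetic test signal are all assumptions.

```python
import math

def envelope(signal, factor=40, smooth=21):
    """Square, down-sample by `factor`, then low-pass with a moving average."""
    squared = [x * x for x in signal]                      # stage 1: squaring
    # stage 2: down-sample by averaging each block of `factor` samples
    blocks = [sum(squared[i:i + factor]) / factor
              for i in range(0, len(squared) - factor + 1, factor)]
    # stage 3: low-pass filter (centered moving average of length `smooth`)
    half = smooth // 2
    env = []
    for i in range(len(blocks)):
        lo, hi = max(0, i - half), min(len(blocks), i + half + 1)
        env.append(sum(blocks[lo:hi]) / (hi - lo))
    return env

def local_extrema(env, threshold):
    """Indices of local maxima above `threshold` and local minima below it."""
    maxima, minima = [], []
    for i in range(1, len(env) - 1):
        if env[i - 1] < env[i] >= env[i + 1] and env[i] > threshold:
            maxima.append(i)
        elif env[i - 1] > env[i] <= env[i + 1] and env[i] < threshold:
            minima.append(i)
    return maxima, minima

FS = 8000                 # recording rate from the text (Hz)
FACTOR = 40               # down-sampling factor from the text
NEW_FS = FS // FACTOR     # 200 Hz

# Synthetic stand-in for a 12 s recording: a 400 Hz tone whose amplitude
# rises and falls once every 2 s (six cycles). Not real lung sound data.
synthetic = [0.5 * (1 - math.cos(math.pi * n / FS)) * math.sin(2 * math.pi * 400 * n / FS)
             for n in range(12 * FS)]
env = envelope(synthetic, FACTOR)
maxima, minima = local_extrema(env, threshold=0.1)
peak_times = [i / NEW_FS for i in maxima]   # seconds
```

On real recordings the envelope is much noisier, so the smoothing length and the threshold would need tuning against the baseline value Bs.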

2.3 Feature Extraction

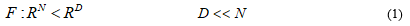

Feature extraction is a crucial step in many data processing and machine learning tasks, as it involves transforming a high-dimensional problem space into a smaller feature space. As shown in Equation 1, D represents the number of new features, which is less than N.

Essentially, this process aims to identify and extract the most relevant information or characteristics from the original data set. This is achieved through a special form of dimensional reduction and mapping, which involves reducing the dimensionality of the original data while preserving as much relevant information as possible. The result is a set of features that are identified as the most significant or unique characteristics of the data vector. These features are then used as input for various data processing and machine learning algorithms, enabling more efficient and accurate data analysis and modeling.

The Welch techniques are a class of spectral analysis methods based on the periodogram spectrum estimate. This estimate is obtained by transforming the time-domain signal into a sequence of cosine or sine waves with varying amplitudes, frequencies, and phases, which is then used to estimate the power spectral density of the signal. The objective of the Welch method is to assess the power of the signal at various frequencies. This is accomplished by dividing the signal y(m) into K segments of length M, which overlap by D points. Each segment is then analyzed separately using Equation 2, which involves applying a window function to reduce spectral leakage and computing the periodogram using the Fast Fourier Transform (FFT) algorithm.

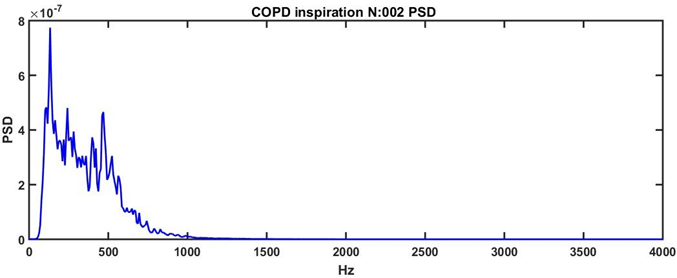

By averaging the periodograms obtained from the different segments, the Welch method provides a more accurate estimate of the power spectral density of the signal than the standard periodogram method. This is because the averaging process reduces the variance of the estimate, which helps to mitigate the effects of noise and other sources of variability in the data. The Welch techniques are widely used in applications where spectral analysis is required, such as signal processing, acoustics, and vibration analysis. They are particularly useful for analyzing non-stationary signals that exhibit changes in frequency content over time. In this study, Welch techniques are used to determine the power spectral density of respiratory sounds over a full cycle, encompassing both the inspiration and expiration phases, for each individual patient. The segment overlap is set to 50% (rounded to the nearest integer), and a Hamming window is applied. Figure 8, Figure 9, Figure 10, and Figure 11 present the lung sound signal for COPD in its original form and after the application of the Welch technique.

Feature selection aims to identify a relevant subset of input features that can represent the data with minimal redundancy. In signal processing, the Power Spectral Density (PSD) measures the power distribution of a signal as a function of frequency and is often used as a feature to analyze and characterize signals, including respiratory sounds. To compute the PSD, the number of FFT points must be specified. The number of FFT points determines the frequency resolution of the resulting estimate, with higher values providing better frequency resolution at the expense of increased data size. However, when analyzing respiratory sounds, the data size resulting from the PSD computation can be too large for each inhalation and exhalation, making it difficult to process and analyze the data efficiently. To address this issue, a DWT (Discrete Wavelet Transform) decomposition rule is used to divide the 0-2000 Hz frequency band into intervals, as shown in Table 2. The DWT is a signal processing technique that decomposes a signal into a series of frequency sub-bands, allowing for a more efficient representation of the frequency content of the signal. The cut-off frequencies of the filters used in the DWT decomposition are designed to be one-fourth of the sampling frequency, which is a common rule of thumb in signal processing. This ensures that the resulting sub-bands capture the relevant frequency components of the respiratory sounds while minimizing the amount of redundant information.
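The Welch estimate described above (overlapping segments, Hamming window, averaged periodograms) can be sketched in plain Python. The segment length of 64 samples, the one-sided scaling, and the 500 Hz test tone are assumptions for illustration; a naive DFT stands in for the FFT to keep the sketch dependency-free.

```python
import cmath
import math

def hamming(m):
    """Hamming window of length m."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (m - 1)) for n in range(m)]

def welch_psd(y, fs, seg_len=64, overlap=0.5):
    """Welch PSD estimate: averaged, Hamming-windowed periodograms.

    seg_len is assumed even. Returns (freqs, psd) for the one-sided
    spectrum from 0 to fs/2.
    """
    step = seg_len - int(round(seg_len * overlap))    # hop between segment starts
    win = hamming(seg_len)
    norm = fs * sum(w * w for w in win)               # window power normalization
    n_bins = seg_len // 2 + 1
    psd = [0.0] * n_bins
    n_segs = 0
    for start in range(0, len(y) - seg_len + 1, step):
        seg = [y[start + n] * win[n] for n in range(seg_len)]
        for k in range(n_bins):                       # naive DFT in place of an FFT
            acc = sum(seg[n] * cmath.exp(-2j * math.pi * k * n / seg_len)
                      for n in range(seg_len))
            psd[k] += abs(acc) ** 2 / norm
        n_segs += 1
    psd = [p / n_segs for p in psd]                   # average over segments
    for k in range(1, n_bins - 1):                    # fold negative frequencies
        psd[k] *= 2.0
    freqs = [k * fs / seg_len for k in range(n_bins)]
    return freqs, psd

FS = 8000
tone = [math.sin(2 * math.pi * 500 * n / FS) for n in range(1024)]  # synthetic test tone
freqs, psd = welch_psd(tone, FS)
peak_hz = freqs[psd.index(max(psd))]
```

Shorter segments give more periodograms to average (lower variance) but coarser frequency resolution, which is the trade-off behind the choice of the number of FFT points.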

Table 2: Frequency band ranges

| Name of Interval | Frequency Interval (Hz) |
|---|---|
| F1 | 1000-2000 |
| F2 | 500-1000 |
| F3 | 250-500 |
| F4 | 125-250 |
| F5 | 62.5-125 |
| F6 | 31.25-62.5 |
| F7 | 0-31.25 |

In particular, the first five frequency intervals (F1 to F5) are selected to create feature vectors because respiratory sounds typically do not contain useful frequency and power components at low frequencies. Frequencies in intervals F6 and F7 are discarded because they are not expected to contain significant information about the respiratory sounds. This results in a more compact and informative feature representation that can be used for applications such as respiratory sound classification and disease diagnosis.
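One way to turn a PSD estimate into a five-element feature vector over the intervals F1 to F5 is sketched below. The text does not say how the PSD values within each interval are summarized, so summing them is an assumption, as are the function name and the flat test spectrum.

```python
# Frequency intervals F1..F5 from Table 2 (Hz); F6 and F7 are discarded.
BANDS = [("F1", 1000.0, 2000.0), ("F2", 500.0, 1000.0), ("F3", 250.0, 500.0),
         ("F4", 125.0, 250.0), ("F5", 62.5, 125.0)]

def band_features(freqs, psd, bands=BANDS):
    """Sum the PSD values whose frequency falls in each band [low, high)."""
    return [sum(p for f, p in zip(freqs, psd) if low <= f < high)
            for _, low, high in bands]

# Hypothetical flat spectrum sampled every 62.5 Hz from 0 to 4000 Hz.
freqs = [k * 62.5 for k in range(65)]
flat_psd = [1.0] * len(freqs)
features = band_features(freqs, flat_psd)
```

With a flat spectrum each feature simply counts the bins in its band, which makes the halving of bandwidth from F1 down to F5 visible in the output.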

3. Classification Algorithms and Result

3.1 Artificial Neural Network (ANN)

In this research, the supervised error back-propagation learning technique is used with the Multi-Layer Perceptron (MLP) architecture, a well-known and effective artificial neural network (ANN) model. The MLP architecture consists of multiple layers of nodes, each layer being fully connected to the next. The connections carry weights, which are adjusted during training to minimize the error between the network output and the desired output. The sigmoid activation function is used on all layers of the MLP. The sigmoid function is a popular choice in neural networks because it is easy to compute and its derivative can be computed analytically, which is essential for the back-propagation learning algorithm. The basic structure of the one-hidden-layer neural network consists of three layers. The first layer is the input layer, which receives the feature vectors from the signal processing step and passes them to the next layer. The second layer is the hidden layer, which processes the aggregated, weighted signal from the input nodes. Its nodes are not directly visible at the input or output of the network and are therefore called “hidden” nodes. The hidden units perform a nonlinear transformation of the input data and generate new features that are more informative for the classification task.

After the hidden units have finished processing data, they transmit it to the output neurons. Before the network generates an output, information is sent along the third layer, which is the output layer. The output layer contains the categorized data, and each output neuron corresponds to a specific class or category. The output neuron with the highest activation level indicates the predicted class for the input data. Overall, the MLP architecture with Back-Propagation learning and sigmoid activation function is a powerful tool for supervised learning tasks, such as classification and regression, and has been successfully applied to various applications in different domains.
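A one-hidden-layer MLP with sigmoid activations and a single-sample back-propagation update can be sketched as below. The layer sizes (5 inputs for F1 to F5, 4 hidden nodes, 2 outputs), the learning rate, the squared-error loss, and the two toy samples are assumptions for illustration and do not reproduce the network configuration of the study.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out):
    """One hidden layer, sigmoid on every layer; the last weight in each row is the bias."""
    h = [sigmoid(sum(w * v for w, v in zip(row, x + [1.0]))) for row in w_hidden]
    o = [sigmoid(sum(w * v for w, v in zip(row, h + [1.0]))) for row in w_out]
    return h, o

def train_step(x, target, w_hidden, w_out, lr=0.5):
    """One error back-propagation update for a single sample (squared error)."""
    h, o = forward(x, w_hidden, w_out)
    # output deltas use the analytic sigmoid derivative o * (1 - o)
    d_out = [(o[k] - target[k]) * o[k] * (1 - o[k]) for k in range(len(o))]
    # hidden deltas are computed before the output weights are changed
    d_hid = [h[j] * (1 - h[j]) * sum(d_out[k] * w_out[k][j] for k in range(len(o)))
             for j in range(len(h))]
    for k, row in enumerate(w_out):
        for j, v in enumerate(h + [1.0]):
            row[j] -= lr * d_out[k] * v
    for j, row in enumerate(w_hidden):
        for i, v in enumerate(x + [1.0]):
            row[i] -= lr * d_hid[j] * v
    return sum((o[k] - target[k]) ** 2 for k in range(len(o)))

random.seed(0)
N_IN, N_HID, N_OUT = 5, 4, 2   # assumed sizes
w_hidden = [[random.uniform(-0.5, 0.5) for _ in range(N_IN + 1)] for _ in range(N_HID)]
w_out = [[random.uniform(-0.5, 0.5) for _ in range(N_HID + 1)] for _ in range(N_OUT)]

# Two made-up feature vectors with one-hot targets (class 0 and class 1).
data = [([0.9, 0.8, 0.1, 0.1, 0.0], [1.0, 0.0]),
        ([0.1, 0.2, 0.9, 0.8, 0.7], [0.0, 1.0])]
losses = []
for epoch in range(500):
    losses.append(sum(train_step(x, t, w_hidden, w_out) for x, t in data))

def predict(x):
    """Index of the output neuron with the highest activation."""
    _, o = forward(x, w_hidden, w_out)
    return o.index(max(o))
```

The predicted class is the index of the most active output neuron, matching the decision rule described in the text.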

3.2 K Nearest Neighbor (KNN)

KNN is a non-parametric algorithm used in statistical prediction and pattern recognition to classify cases based on their similarity to other cases. However, similarity metrics may not always consider attribute relationships, leading to incorrect distances and affecting classification precision. KNN uses a distance function such as Euclidean, Minkowski, or Mahalanobis Distance. If k = 1, the case is assigned to its nearest neighbor’s class. Training the nearest neighbor technique involves calculating distances between cases based on their feature set values. The nearest neighbors have the smallest distances to a given case, calculated using one of the distance methods. The KNN algorithm can be summarized as:

First, a positive integer k is specified. This value represents the number of nearest neighbors that will be used to classify a new sample. Additionally, a new sample is provided, which is the object that needs to be classified. Next, the algorithm searches through the database to find the k entries that are closest to the new sample, where closeness is determined using the chosen distance function. Once the k closest entries have been identified, the algorithm determines the most common classification among them by tallying the classifications of the k entries and selecting the one that appears most frequently. Finally, the algorithm assigns this most common classification to the new sample. This is the final output of the KNN algorithm for that sample. The model is simple and can perform well on a given dataset. However, it requires large memory storage, and the chosen value of k can significantly affect the algorithm’s performance.

During the classification process, two algorithms, Artificial Neural Network (ANN) and K-Nearest Neighbors (KNN), are employed in the decision-making step. Their primary purpose is to determine whether the health status of a subject should be categorized as healthy or pathological. To accomplish this, the features relevant to the classification task are divided into two distinct groups.
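The KNN procedure summarized above can be sketched in a few lines. The Euclidean distance, the value k = 3, and the toy two-dimensional database are assumptions for illustration only.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_classify(sample, database, k=3, distance=euclidean):
    """Classify `sample` by majority vote among its k nearest database entries.

    database is a list of (feature_vector, label) pairs.
    """
    neighbors = sorted(database, key=lambda entry: distance(sample, entry[0]))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Made-up feature vectors, not data from the study.
database = [
    ([0.1, 0.2], "healthy"), ([0.2, 0.1], "healthy"), ([0.0, 0.3], "healthy"),
    ([0.9, 0.8], "pathological"), ([0.8, 0.9], "pathological"), ([1.0, 0.7], "pathological"),
]
label_a = knn_classify([0.15, 0.15], database, k=3)
label_b = knn_classify([0.85, 0.85], database, k=3)
```

An odd k avoids tied votes in a two-class problem; with ties, the outcome here would depend on the neighbor ordering.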

3.3 Results

To evaluate the performance of the classification algorithms, the research includes both a training phase and a testing phase. In the training phase, the algorithms learn the patterns and relationships between the features and the corresponding health statuses from the provided data. The testing phase assesses the accuracy and generalization ability of the trained models on unseen data. To ensure an unbiased evaluation, the data used for categorization is split into separate training and testing sets: 30% of the data is allocated for testing, while the remaining 70% is used for training. Holding out the test data in this way allows for a robust assessment and comparison of the algorithms’ performance.

In this study, the ANN and KNN algorithms are compared in their ability to classify lung sound signals as either diseased or healthy. The features for the classification task are obtained with the Fast Fourier Transform (FFT) based Welch spectral technique. This technique uses frequency-domain analysis to extract relevant features from the lung sound signals, enabling the algorithms to discern patterns indicative of diseased or healthy states. The research aims to determine which algorithm, ANN or KNN, exhibits superior classification performance in this specific context. Table 3 and Table 4 provide detailed information on the classification performance achieved by both classification algorithms for each individual breathing cycle.
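
As a rough illustration of the FFT-Welch feature extraction described above, the sketch below averages Hann-windowed periodograms over overlapping segments and then sums the spectrum into band powers. The segment length, the 50% overlap, the Hann window, the band edges, the sampling rate, and the function names `welch_psd` and `band_powers` are assumptions made for illustration; the study does not state these settings. The naive DFT keeps the example free of dependencies; in practice a library routine such as `scipy.signal.welch` would normally be used.

```python
import cmath
import math


def welch_psd(signal, fs, nperseg=100, overlap=0.5):
    """One-sided power spectral density estimated with Welch's method."""
    step = max(1, int(nperseg * (1 - overlap)))
    # Periodic Hann window applied to every segment.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / nperseg) for n in range(nperseg)]
    scale = fs * sum(w * w for w in window)
    n_bins = nperseg // 2 + 1
    psd = [0.0] * n_bins
    n_segments = 0
    for start in range(0, len(signal) - nperseg + 1, step):
        segment = signal[start:start + nperseg]
        mean = sum(segment) / nperseg
        tapered = [(x - mean) * w for x, w in zip(segment, window)]
        for k in range(n_bins):
            # Naive DFT of the tapered segment at frequency bin k.
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * n / nperseg)
                for n, x in enumerate(tapered)
            )
            power = abs(coeff) ** 2 / scale
            # One-sided spectrum: double every bin except DC and Nyquist.
            if 0 < k < nperseg / 2:
                power *= 2
            psd[k] += power
        n_segments += 1
    if n_segments == 0:
        raise ValueError("signal is shorter than one segment")
    freqs = [k * fs / nperseg for k in range(n_bins)]
    return freqs, [p / n_segments for p in psd]


def band_powers(freqs, psd, bands):
    """Integrate the PSD over each (low, high) band to get one feature per band."""
    df = freqs[1] - freqs[0]
    return [sum(p for f, p in zip(freqs, psd) if lo <= f < hi) * df for lo, hi in bands]


# Synthetic stand-in for one breathing-phase segment: a 100 Hz tone sampled at 1 kHz.
fs = 1000
signal = [math.sin(2 * math.pi * 100 * n / fs) for n in range(500)]
freqs, psd = welch_psd(signal, fs)
bands = [(0, 150), (150, 300), (300, 500)]  # hypothetical band edges in Hz
features = band_powers(freqs, psd, bands)
peak_freq = freqs[max(range(len(psd)), key=psd.__getitem__)]
```

Each inspiration or expiration segment would yield one such feature vector, which can then be passed to the ANN or KNN classifier.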

Table 3: Sensitivity and specificity values (%), derived from the confusion matrix, calculated using the FFT-Welch approach and the ANN algorithm for right and left basal inspiration and expiration sounds.

| Region | Phase | Class 1 Sensitivity | Class 1 Specificity | Class 2 Sensitivity | Class 2 Specificity |
|---|---|---|---|---|---|
| Right Basal | Inspiration | 92 | 74 | 71 | 92 |
| Right Basal | Expiration | 72 | 73 | 68 | 72 |
| Left Basal | Inspiration | 96 | 73 | 70 | 71.8 |
| Left Basal | Expiration | 70 | 72 | 72 | 82.6 |

Table 4: Sensitivity and specificity values (%), derived from the confusion matrix, calculated using the FFT-Welch approach and the KNN algorithm for right and left basal inspiration and expiration sounds.

| Region | Phase | Class 1 Sensitivity | Class 1 Specificity | Class 2 Sensitivity | Class 2 Specificity |
|---|---|---|---|---|---|
| Right Basal | Inspiration | 70 | 82 | 97 | 71.4 |
| Right Basal | Expiration | 72.1 | 81.7 | 85 | 85.7 |
| Left Basal | Inspiration | 88 | 89.2 | 91.4 | 90 |
| Left Basal | Expiration | 72.1 | 82.2 | 94 | 73 |

These tables allow a thorough analysis of the algorithms’ effectiveness in categorizing the dataset based on different breathing patterns. The classification performance metrics are reported for each breathing cycle, providing an overview of the algorithms’ performance across scenarios. Examining the tables reveals the strengths and weaknesses of the classification algorithms in relation to different breathing patterns, which enables further investigation and refinement of the algorithms.

In Table 3 and Table 4, Class 1 represents the actual lung sound signals that have been labeled and categorized by medical professionals. These signals are derived from real-world recordings of lung sounds obtained during various clinical scenarios. They serve as a reliable reference for evaluating the classification algorithms, as they reflect the characteristics and patterns of lung sounds as identified by experienced doctors. Class 2, on the other hand, refers to automatically segmented lung sound signals. These signals are obtained through an automated segmentation process that extracts specific portions of the recorded lung sounds based on predefined criteria. The segmentation algorithm separates the lung sound signals into distinct components, allowing for further analysis and classification. Class 2 signals therefore provide an alternative view of the lung sound data and make it possible to assess how accurately the classification algorithms categorize the automatically segmented segments.

Distinguishing between Class 1 and Class 2 signals in Table 3 and Table 4 makes it possible to evaluate the algorithms’ performance on both expert-labeled and automatically segmented lung sound signals. This comparison helps assess the algorithms’ robustness and generalizability across different types of lung sound data and their potential for real-world clinical applications. The specificities and sensitivities obtained from the ANN and KNN classification algorithms are presented in Table 3 and Table 4. Table 5 and Table 6 compare the accuracy for Class 1 and Class 2.

Table 5: Actual LS signals (Class 1) classification accuracy

| Region | Algorithm | Inspiration Cycle | Expiration Cycle |
|---|---|---|---|
| Right Basal | ANN | 81.6 | 70 |
| Right Basal | KNN | 90.4 | 92.4 |
| Left Basal | ANN | 80 | 70.1 |
| Left Basal | KNN | 93.7 | 82 |

Table 6: Automatically segmented LS signals (Class 2) accuracy

| Region | Algorithm | Inspiration Cycle | Expiration Cycle |
|---|---|---|---|
| Right Basal | ANN | 80 | 89.2 |
| Right Basal | KNN | 71.2 | 62 |
| Left Basal | ANN | 75.3 | 69 |
| Left Basal | KNN | 81.7 | 72.7 |
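
The sensitivity, specificity, and accuracy figures reported in Tables 3 to 6 are standard confusion-matrix ratios. The sketch below shows how they are computed from raw counts. Treating the pathological class as the positive class, the function name `classification_metrics`, and the example counts are assumptions for illustration only; the counts are hypothetical and are not taken from the study.

```python
def classification_metrics(tp, fn, tn, fp):
    """Return (sensitivity, specificity, accuracy) in percent.

    tp, fn: pathological cases classified correctly / incorrectly.
    tn, fp: healthy cases classified correctly / incorrectly.
    """
    sensitivity = 100.0 * tp / (tp + fn)   # share of pathological cases detected
    specificity = 100.0 * tn / (tn + fp)   # share of healthy cases recognized
    accuracy = 100.0 * (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


# Hypothetical counts for one phase and one recording site.
sens, spec, acc = classification_metrics(tp=23, fn=2, tn=37, fp=13)
print(f"sensitivity={sens:.1f}%  specificity={spec:.1f}%  accuracy={acc:.1f}%")
```

Accuracy is a weighted average of sensitivity and specificity, weighted by the number of pathological and healthy cases, so it always lies between the two.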

4. Discussion

The detection and analysis of lung sounds, also known as respiratory sounds, play a crucial role in identifying abnormalities in breathing patterns. In clinical cases, accurately identifying the ventilation cycle provides essential information for medical professionals. Each breathing cycle exhibits distinct characteristics that are associated with specific pathological information. This is significant for the diagnosis of respiratory diseases and aids in making informed decisions regarding the health condition of the individual under examination.

In this study, lung sound signals were analyzed to investigate respiratory patterns. Specifically, segments of the inhalation and exhalation phases were obtained with sensors from the basal regions of both the left and right lungs at designated auscultation points. These lung sound signals were digitized and transmitted to a computer for further processing. To ensure data accuracy, the signals were filtered to eliminate external and internal noise. The respiratory sound signals were then segmented into separate respiratory activities, namely inspiration and expiration, using an enveloping method. Next, a feature extraction method was applied to transform the raw inspiratory and expiratory sound data into parametric features, converting the complex sound signals into representative numerical features that capture essential characteristics of the respiratory patterns. The obtained features were then used to classify the sound signal data into healthy or pathological (unhealthy) categories. To analyze the lung sound signals, the power spectrum of each segment, for both the inspiration and expiration phases, was computed using the FFT-Welch method.
This spectral analysis helped to identify and quantify the frequency components present in the respiratory sound signals, providing further insight into the underlying characteristics of the lung sounds.

For the classification task, two machine learning algorithms, namely Artificial Neural Networks (ANN) and K-Nearest Neighbors (K-NN), were employed. The K-NN algorithm yielded the higher accuracy for the actual lung sound signals labeled by medical experts (Table 5) and for the left basal automatically segmented signals, whereas ANN was more accurate for the right basal automatically segmented signals (Table 6). K-NN accuracy was generally higher during the inhalation phase than during exhalation. The findings of this study highlight the efficacy of the applied methodology in distinguishing between healthy and pathological lung sound signals. By combining machine learning techniques with signal processing methods, this research contributes to the development of reliable and effective tools for diagnosing respiratory diseases and assessing the health status of individuals based on their lung sound characteristics.

5. Declarations


Funding: This work is supported by the “Analysis of the Respiratory Disease Diagnosis and Electronic Auscultation Sound Device Design” project of TUBITAK under MAG104M38.

Conflict of interest: There is no conflict of interest.

Ethics approval: Ethical approval was obtained from the local “Ethical Committee” of the College of Medicine at the University of Gaziantep Hospital.

Availability of data and materials: The datasets generated and/or analyzed during the current study are not publicly available due to personal data protection law but are available from the corresponding author upon reasonable request.


